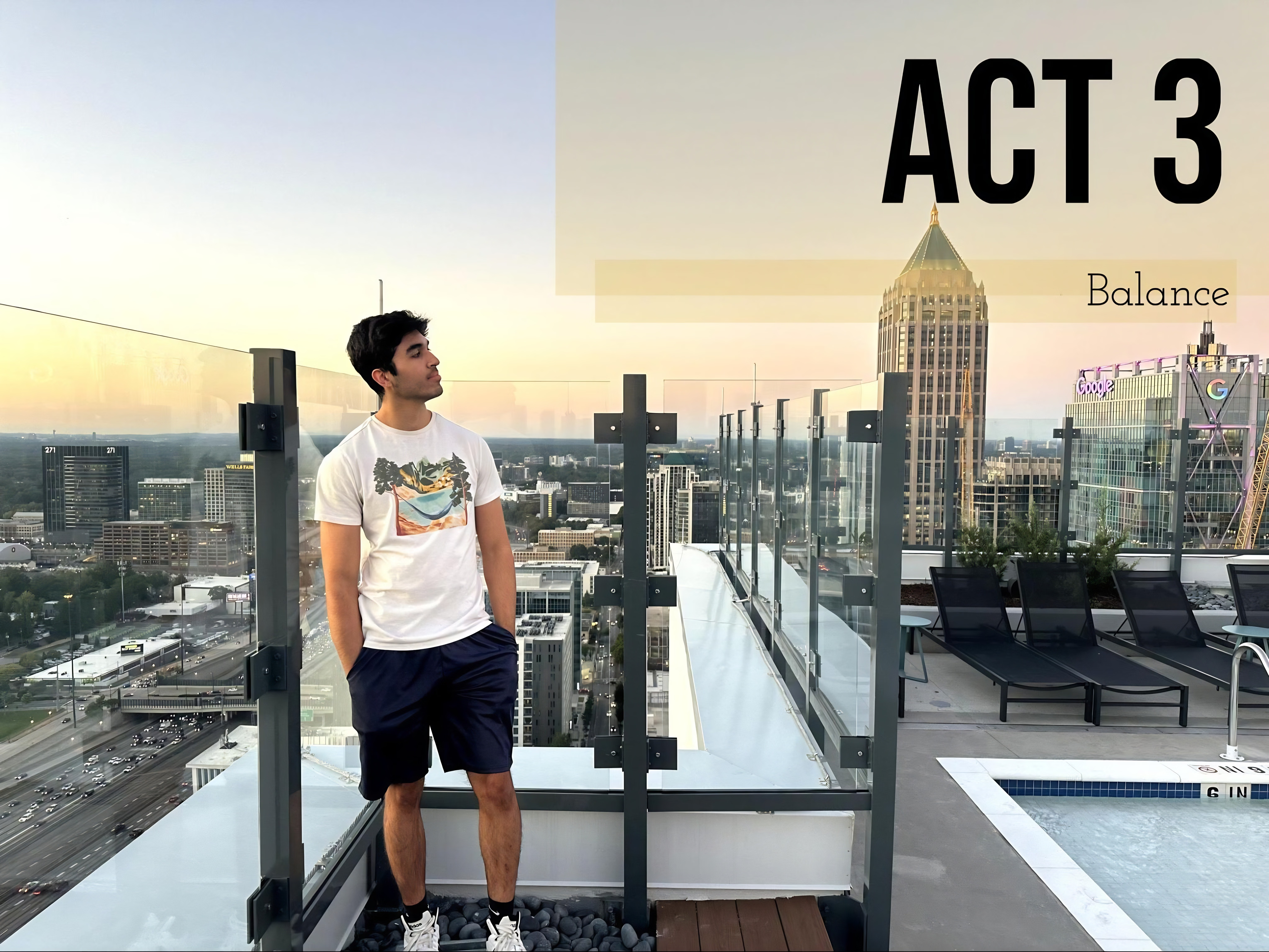

Where are you?

Nov '25 — Present

Prologue

Where am I?

Aug '25 — Oct '25

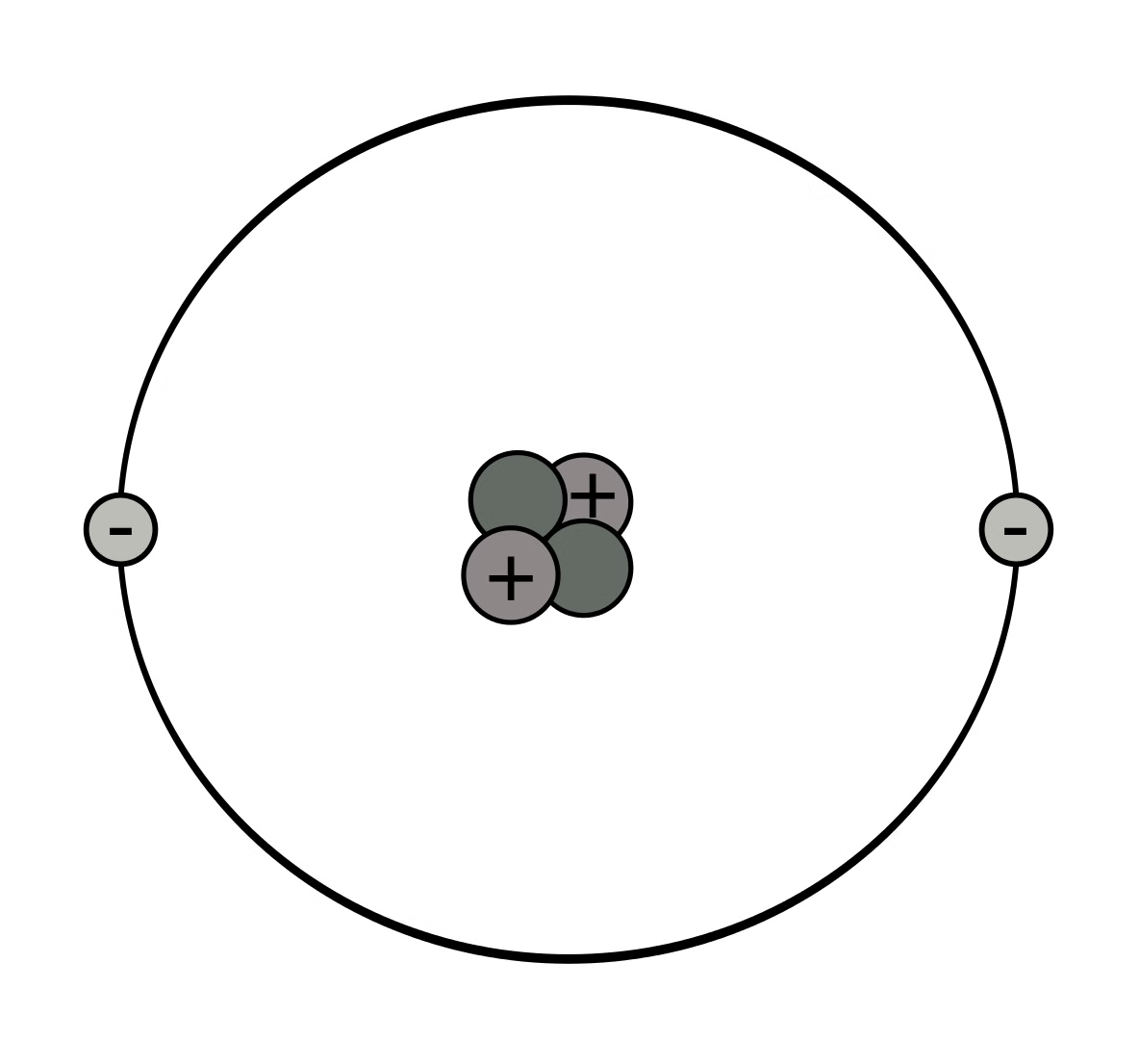

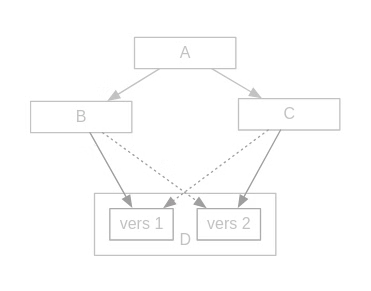

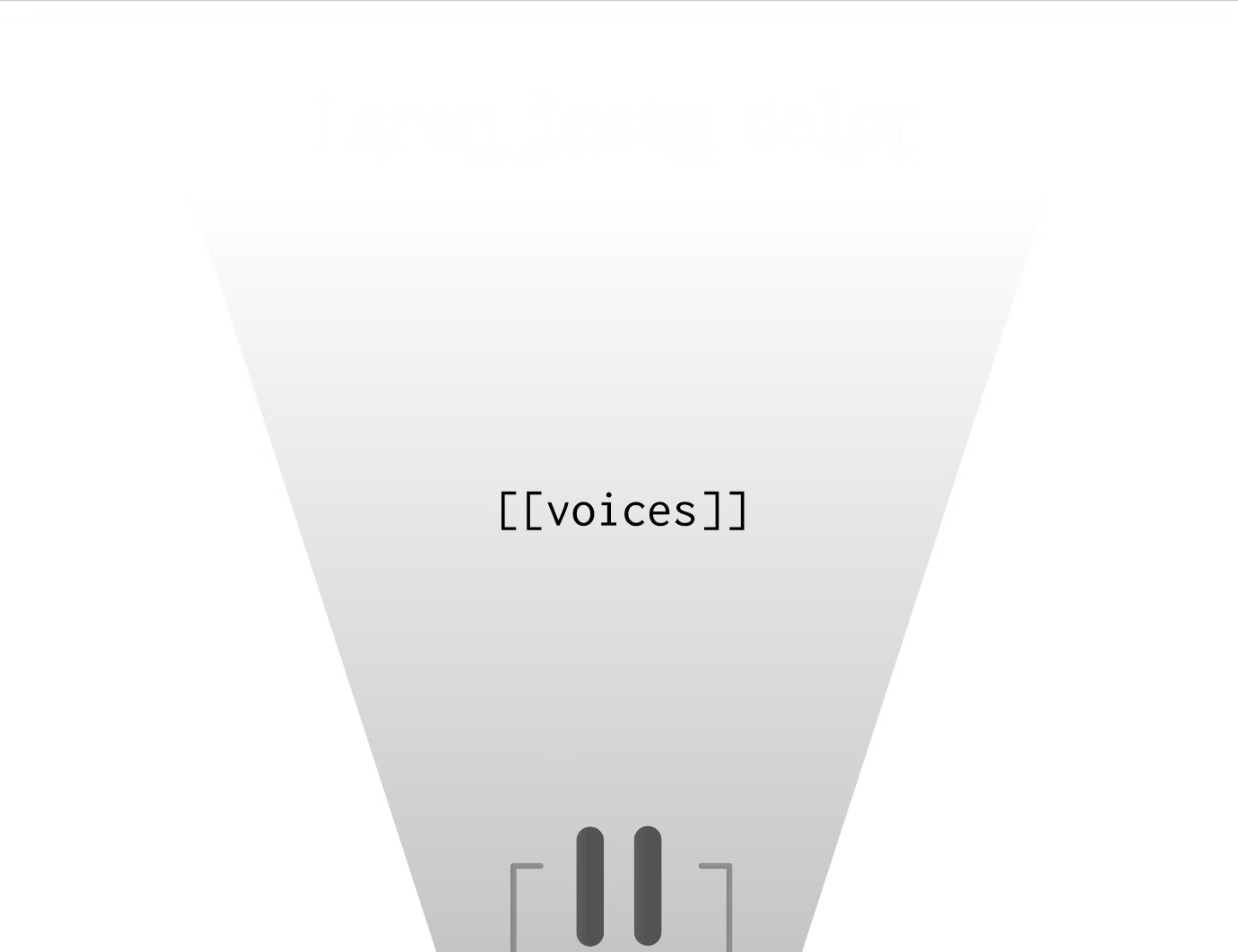

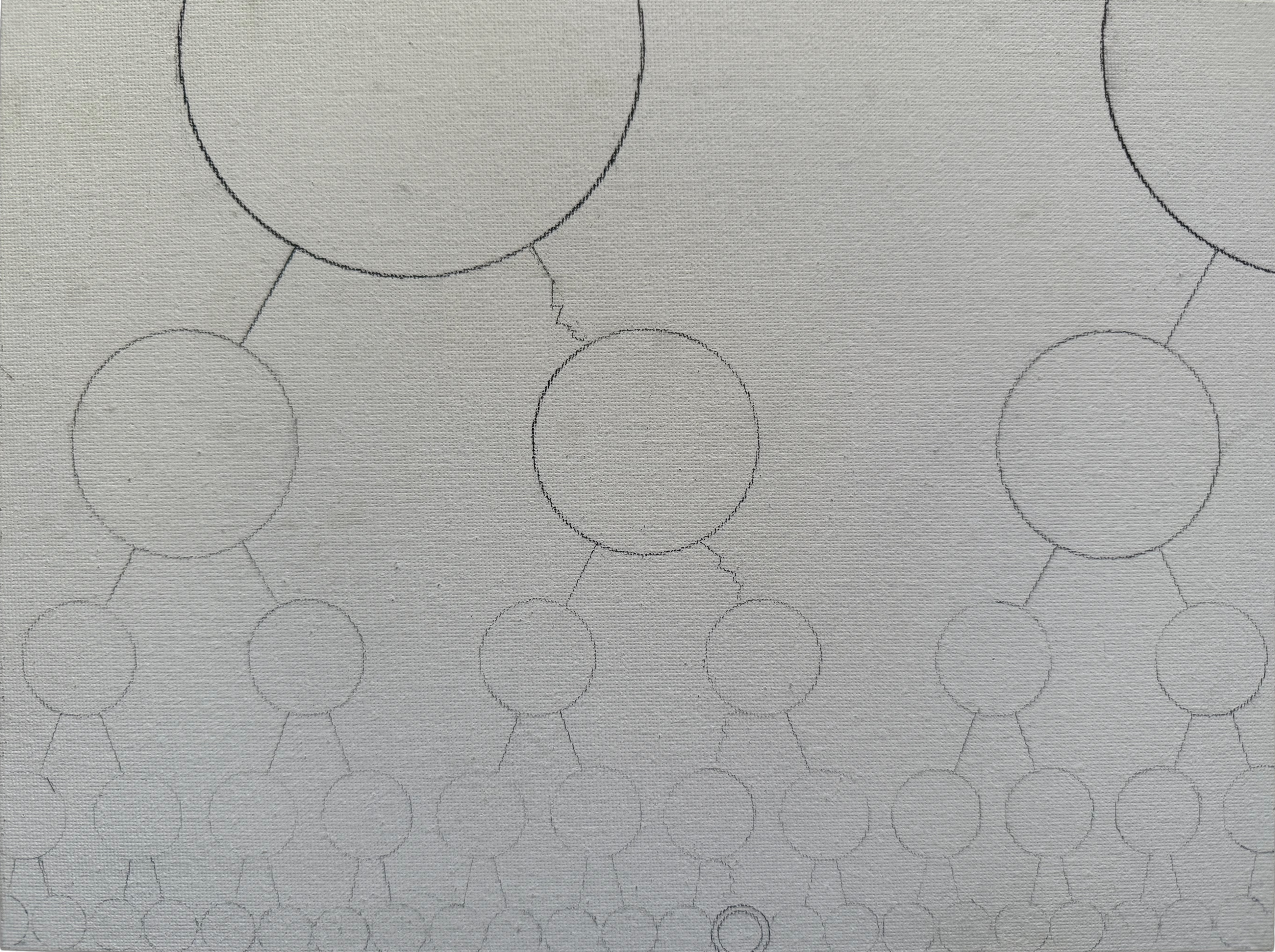

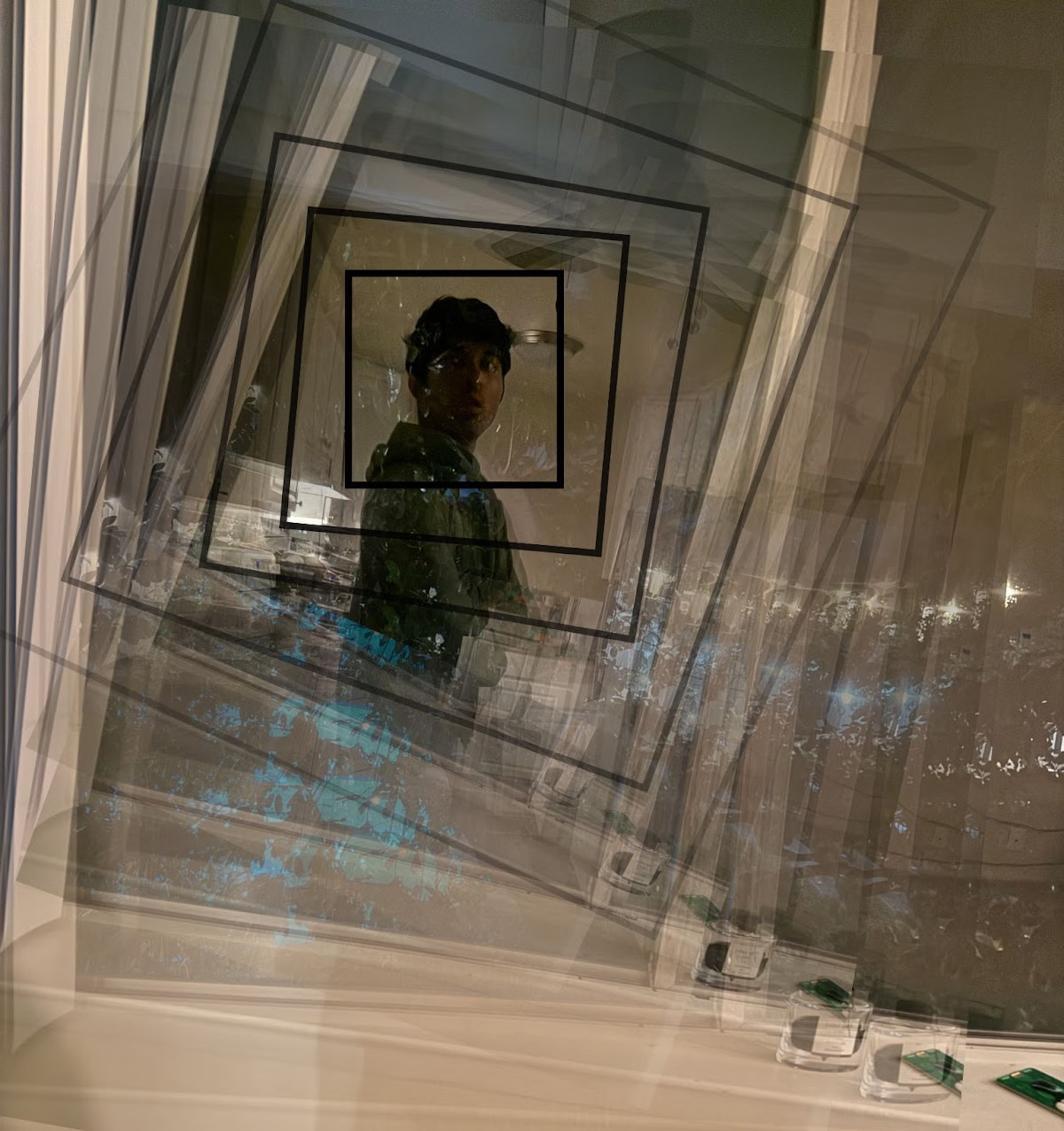

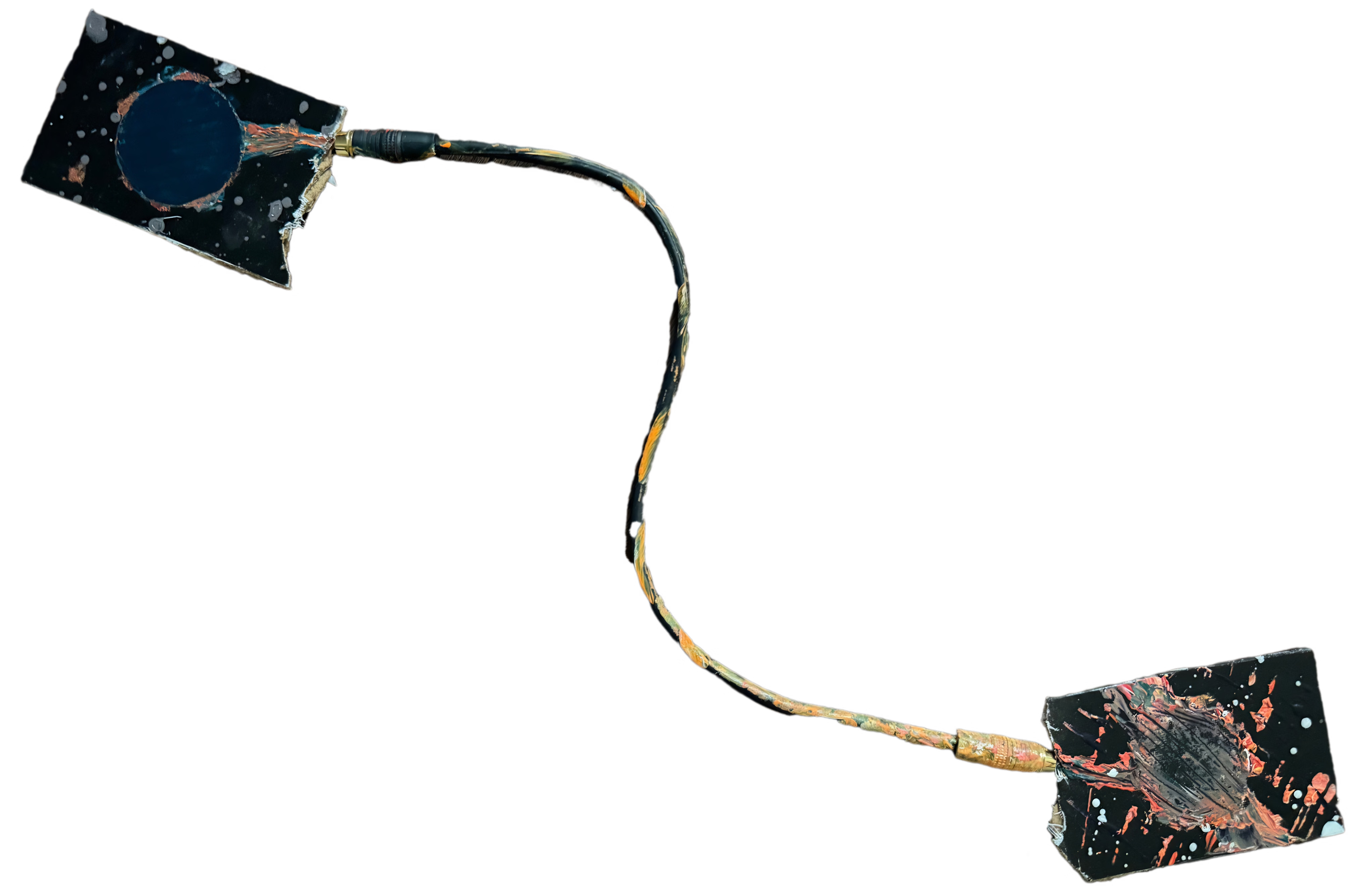

How many am I?

Jun '25 — Aug '25

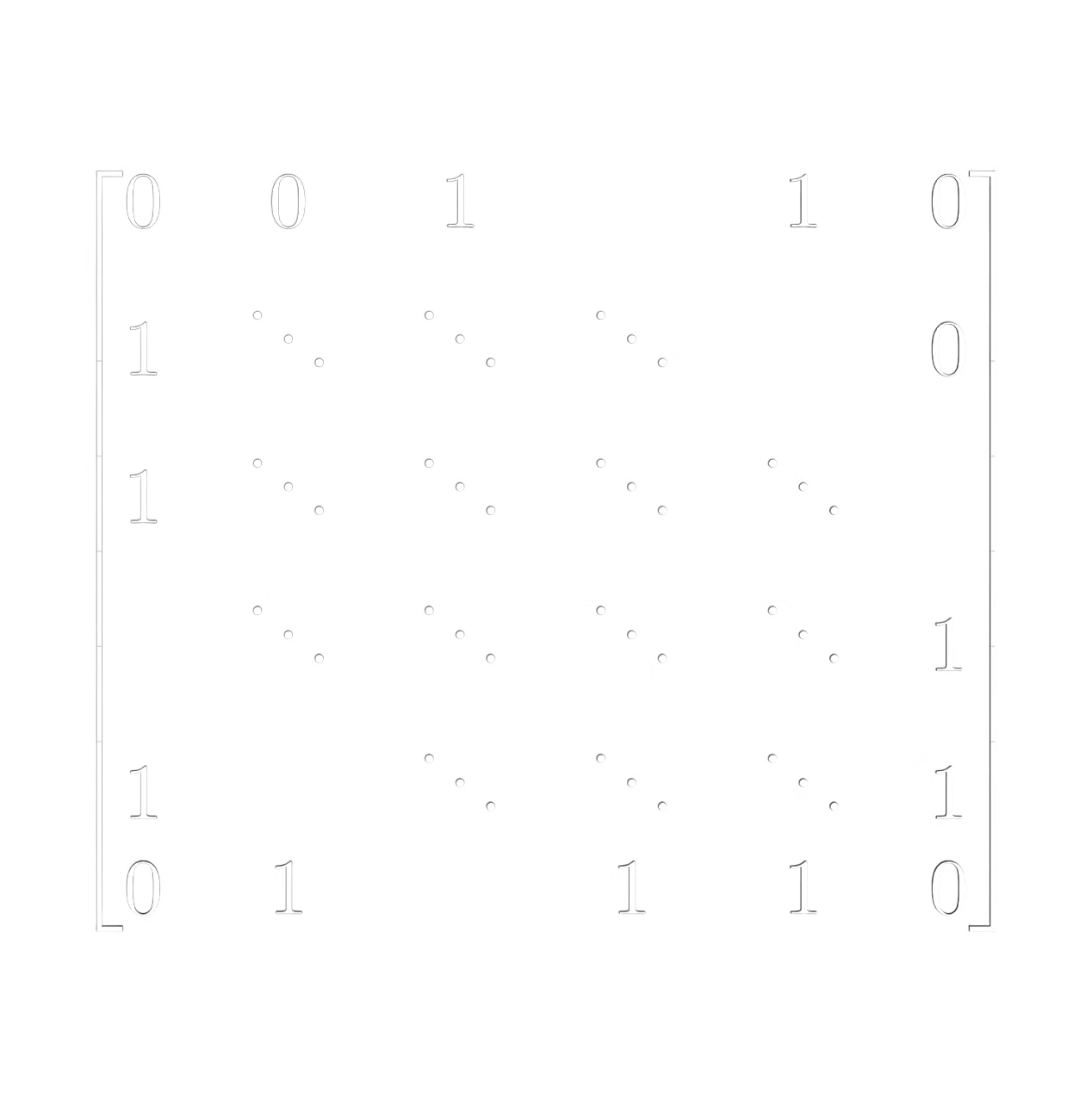

Prologue

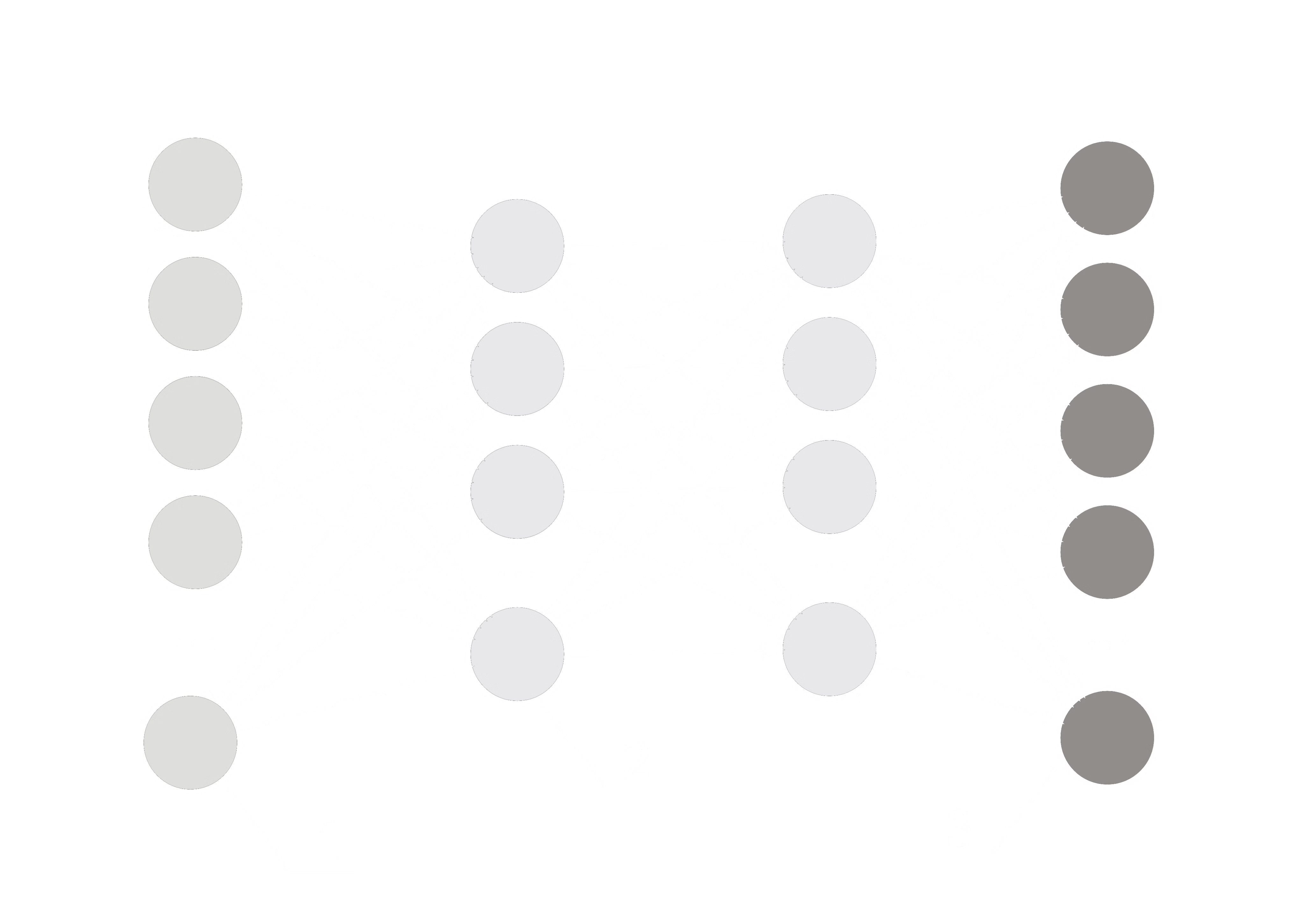

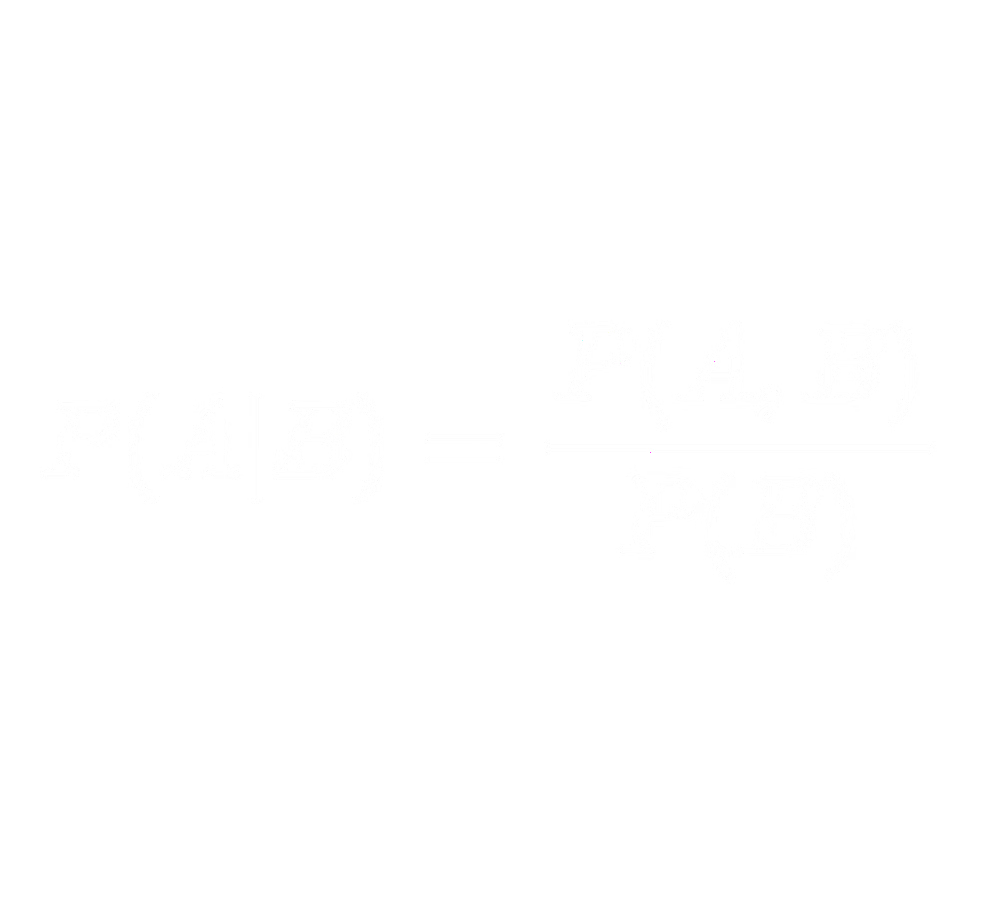

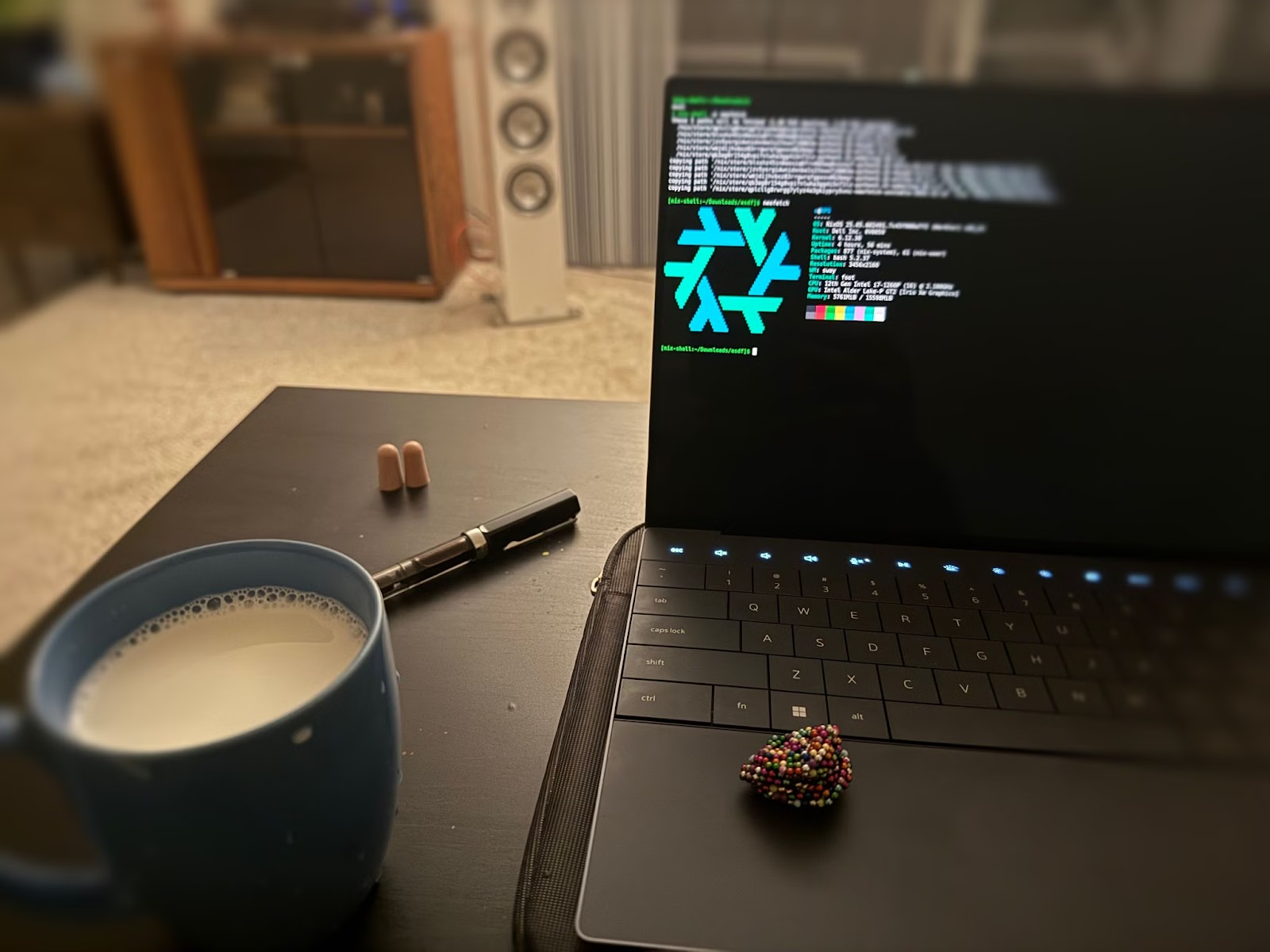

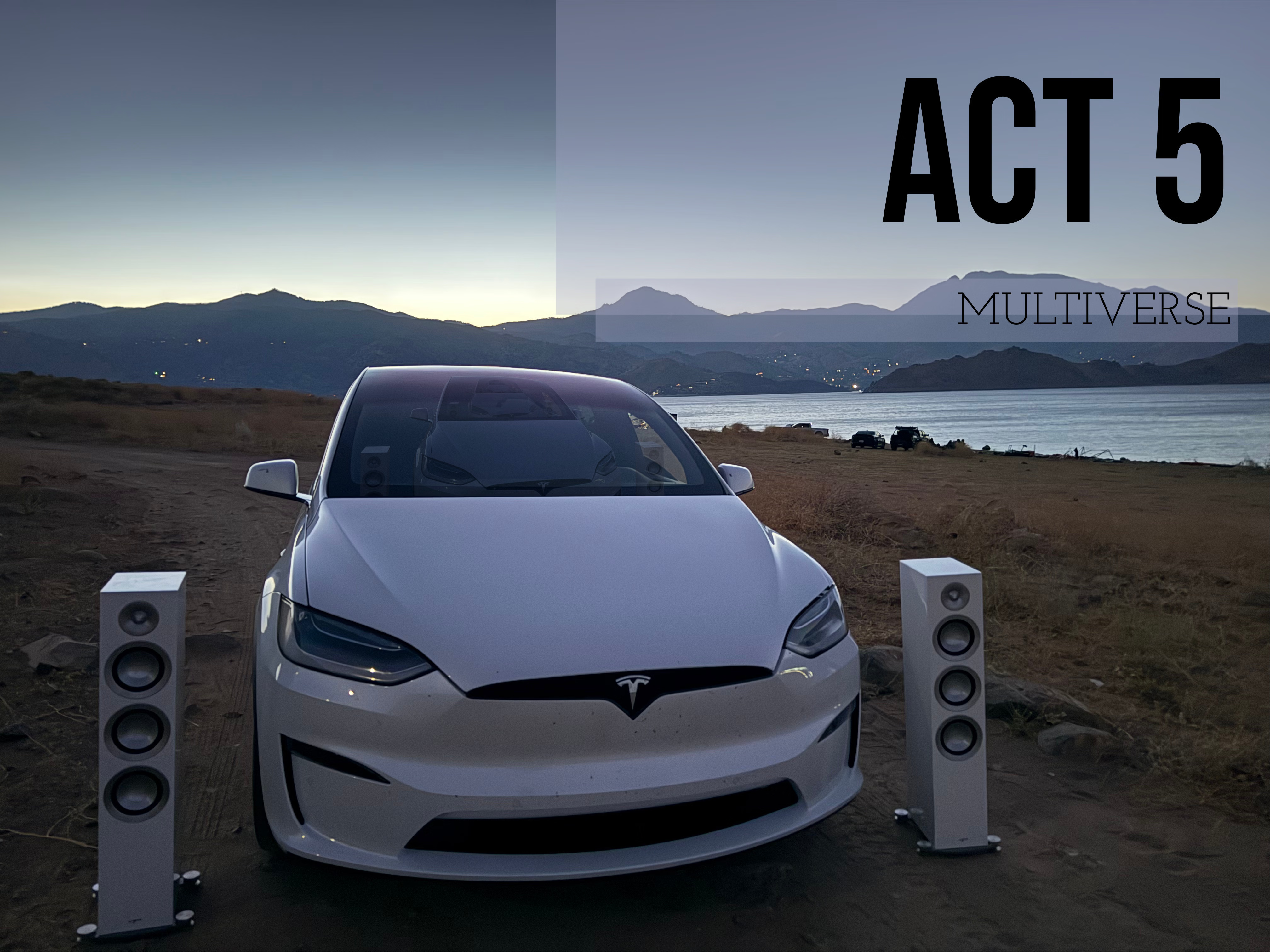

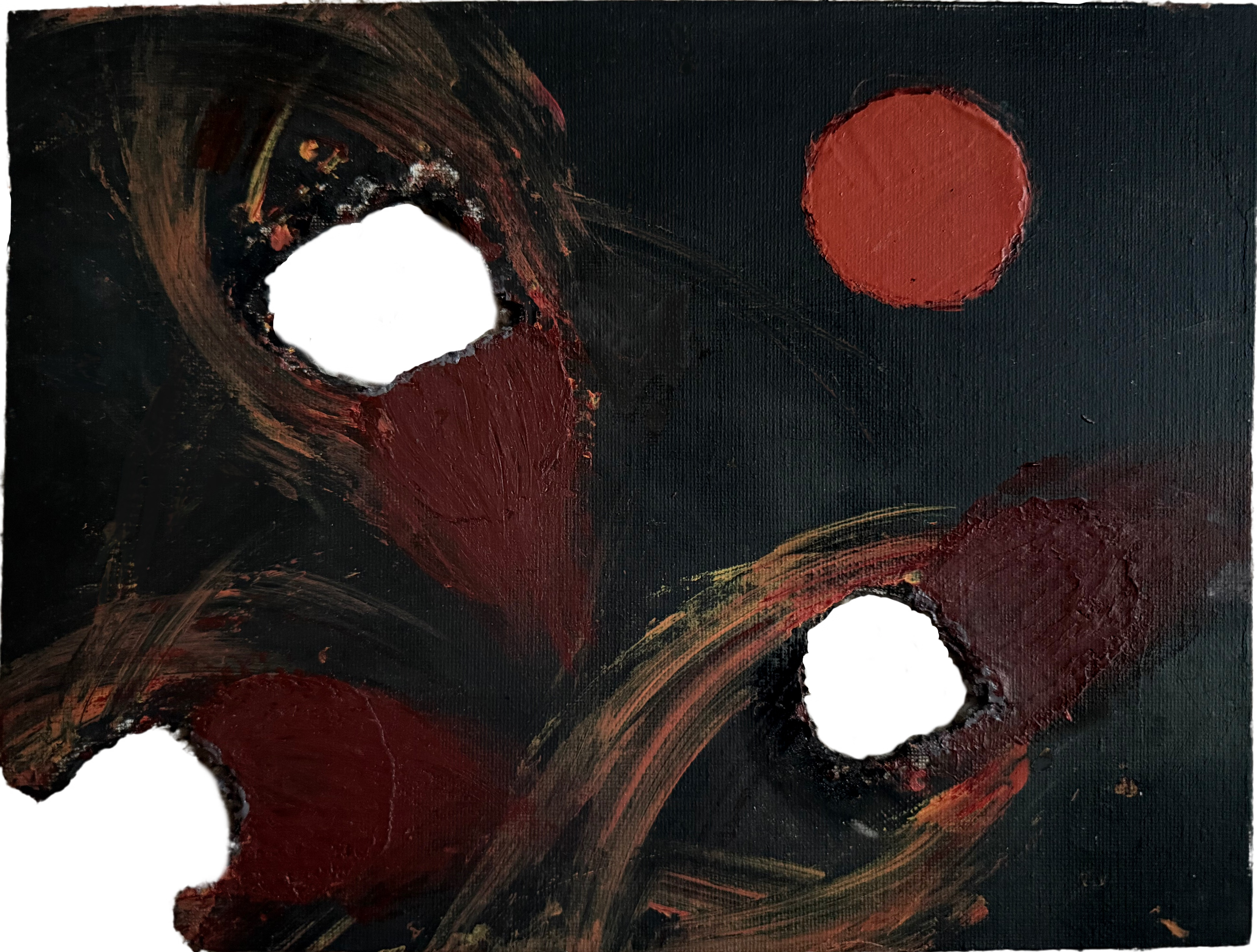

What am I?

Mar '25 — Jun '25

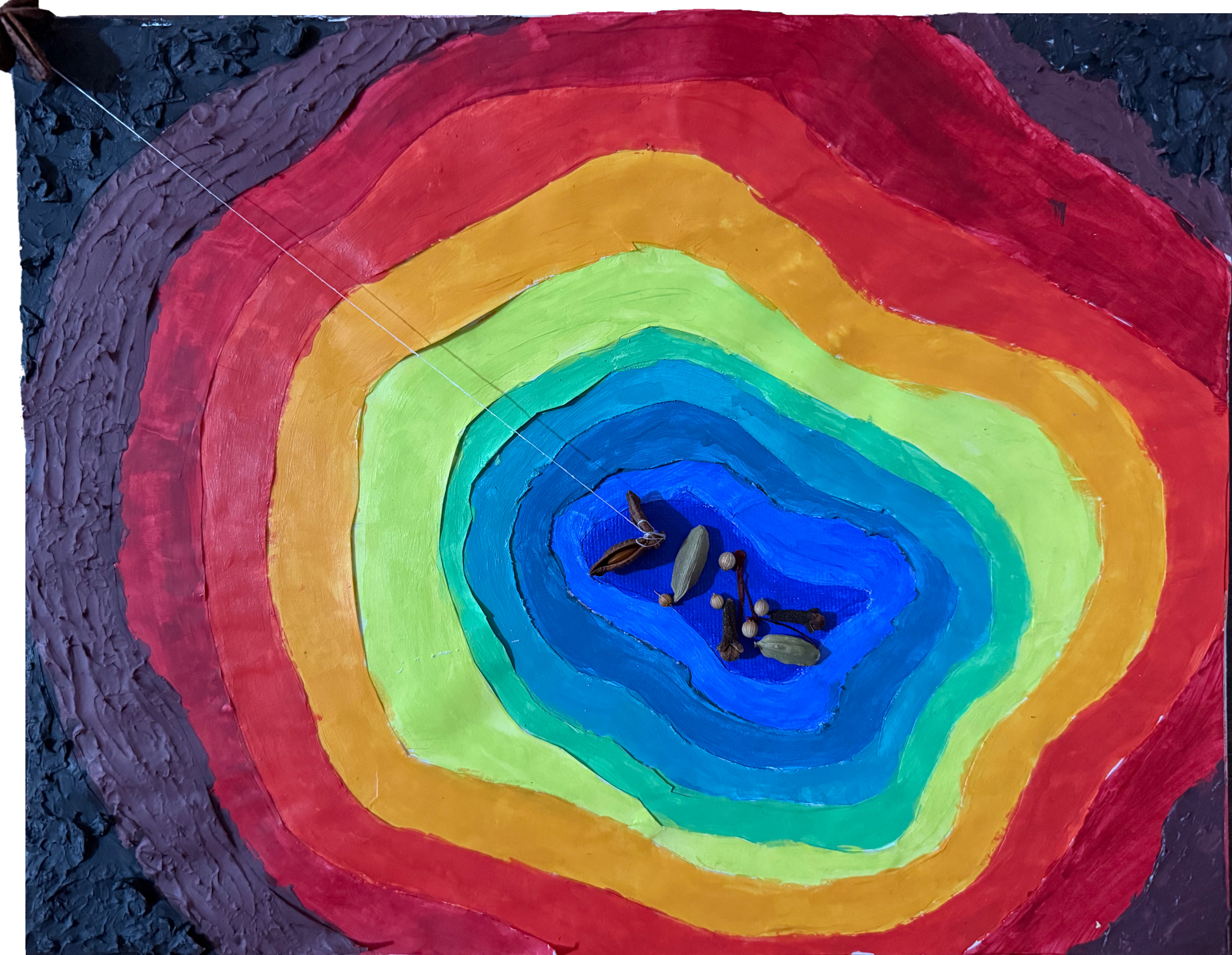

Prologue

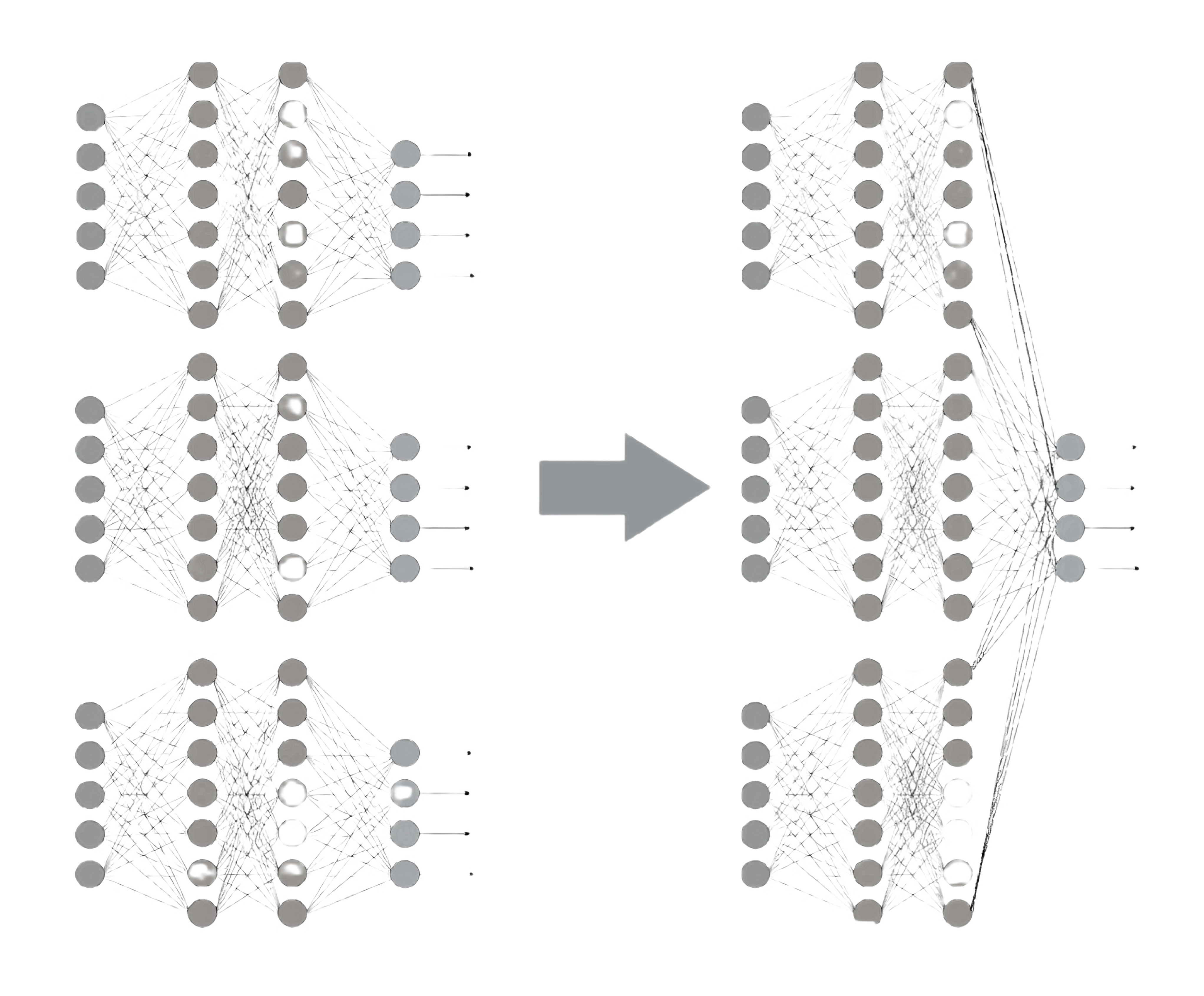

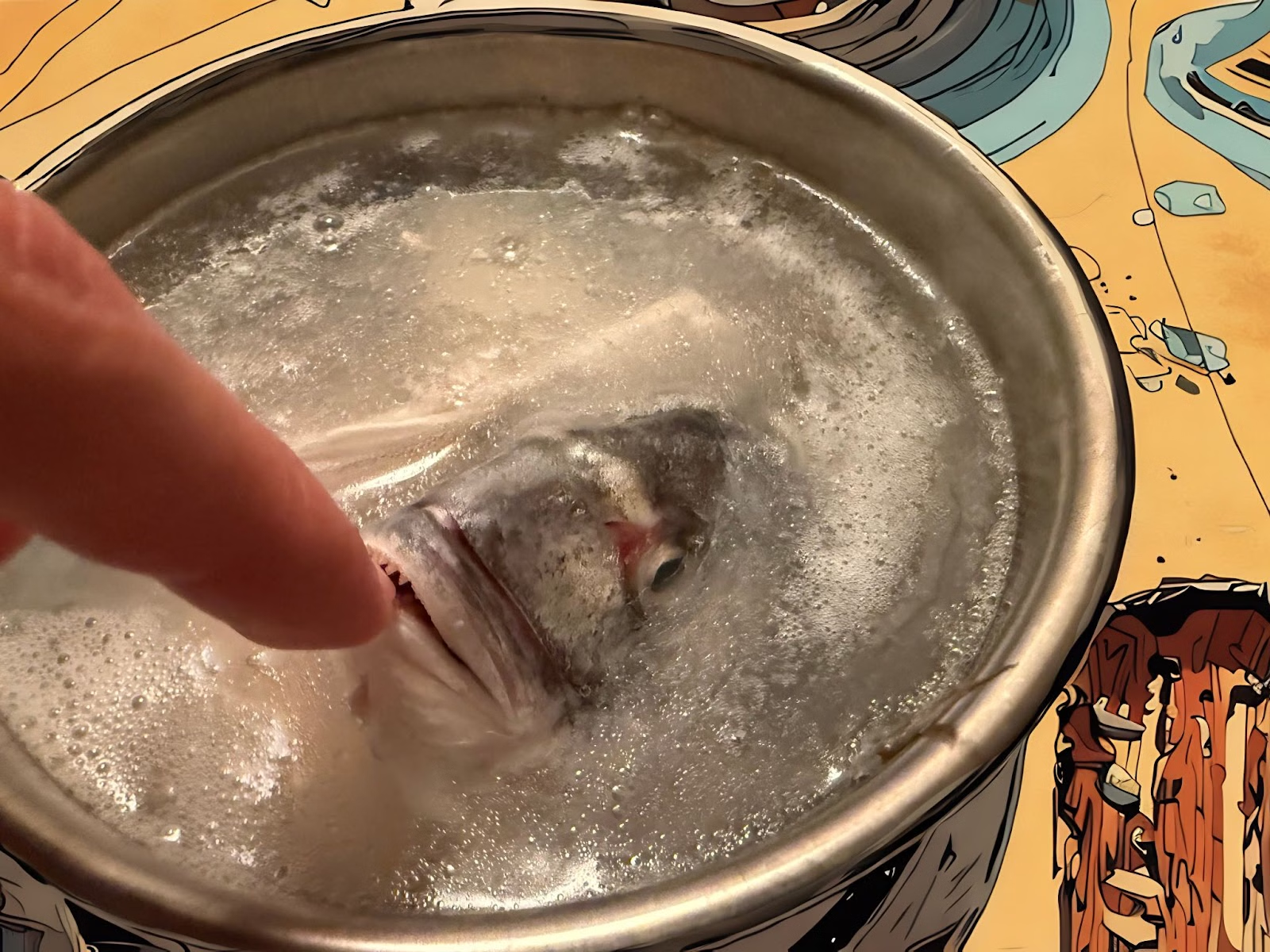

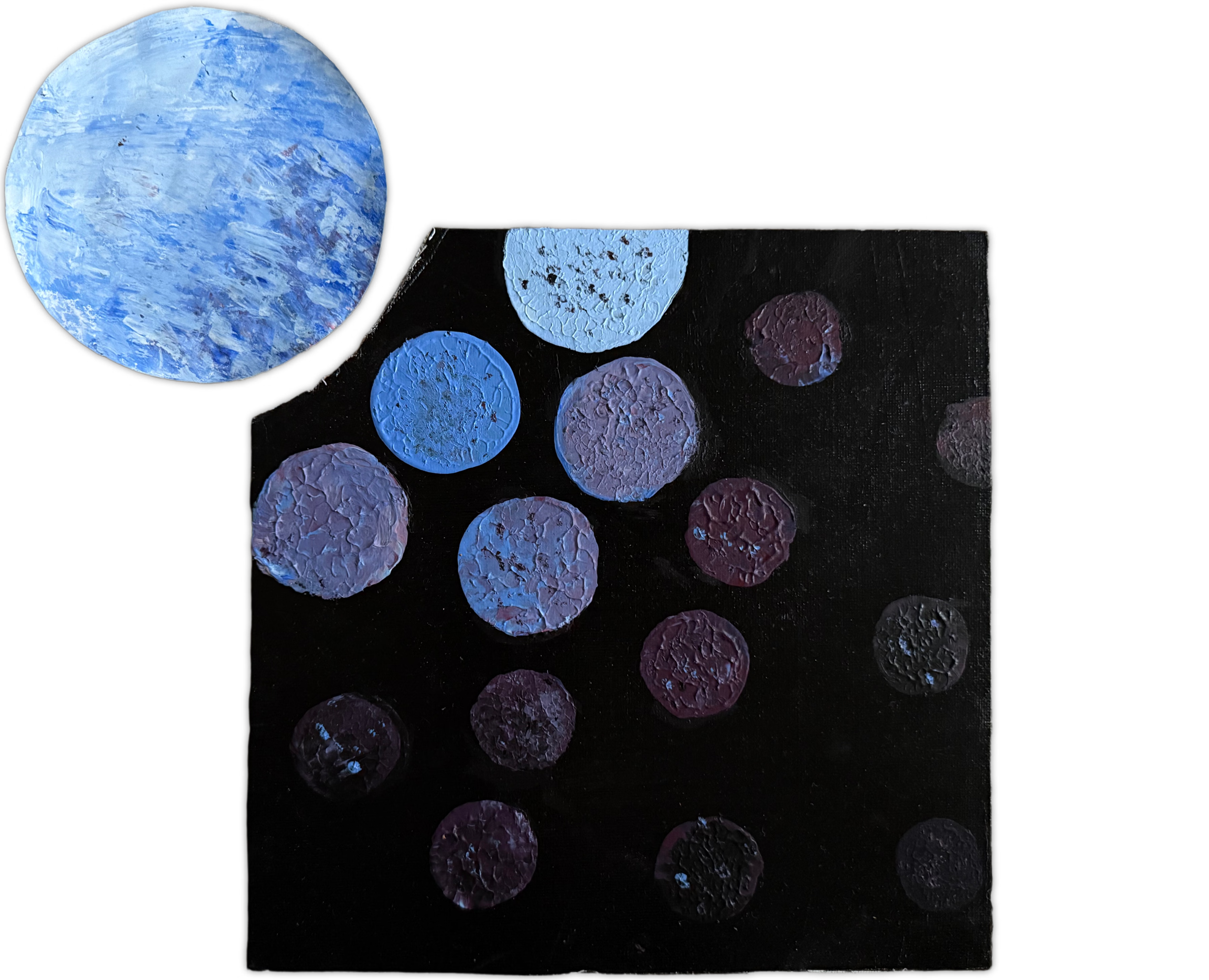

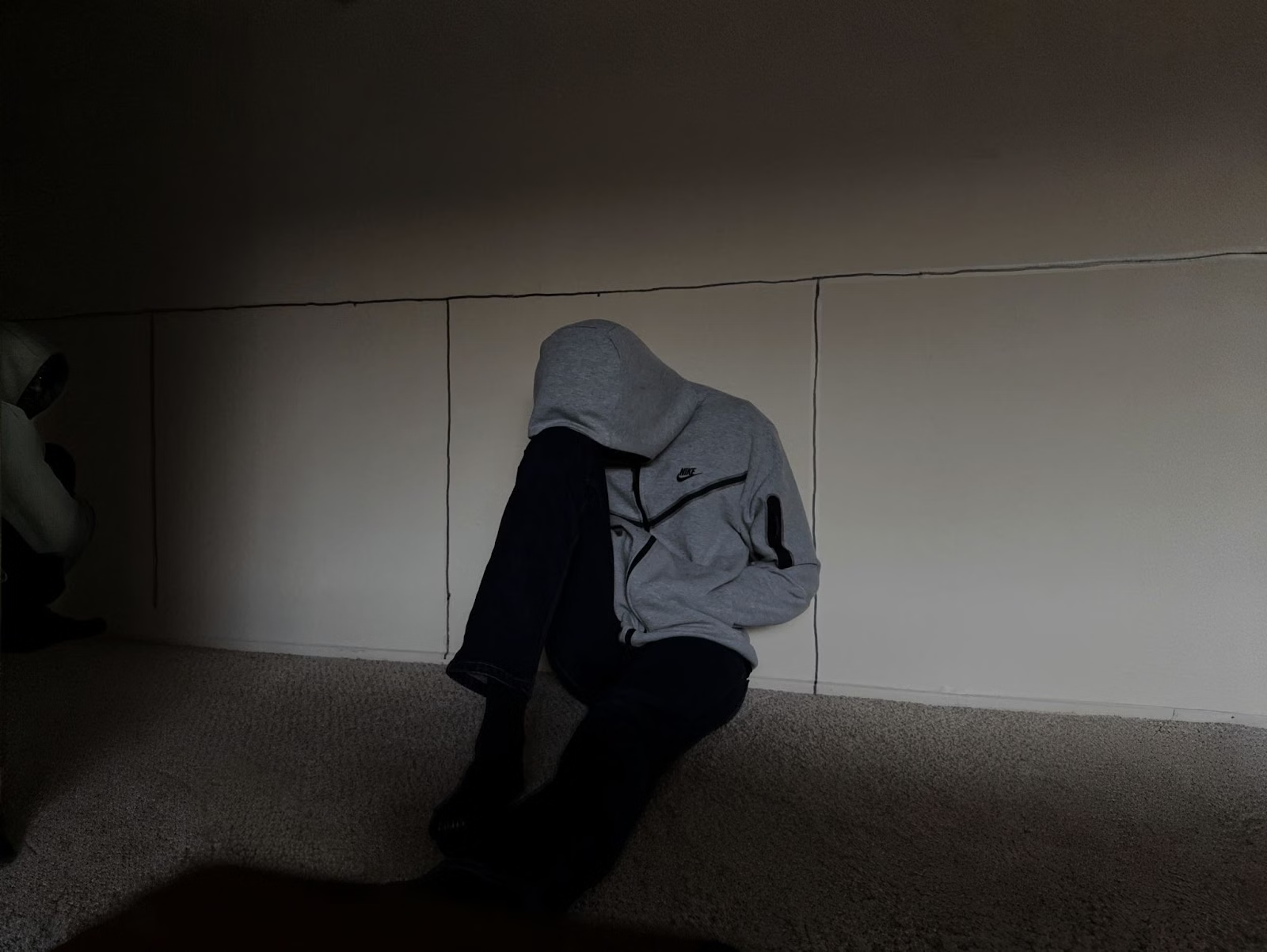

Who am I becoming?

Oct '24 — Dec '24

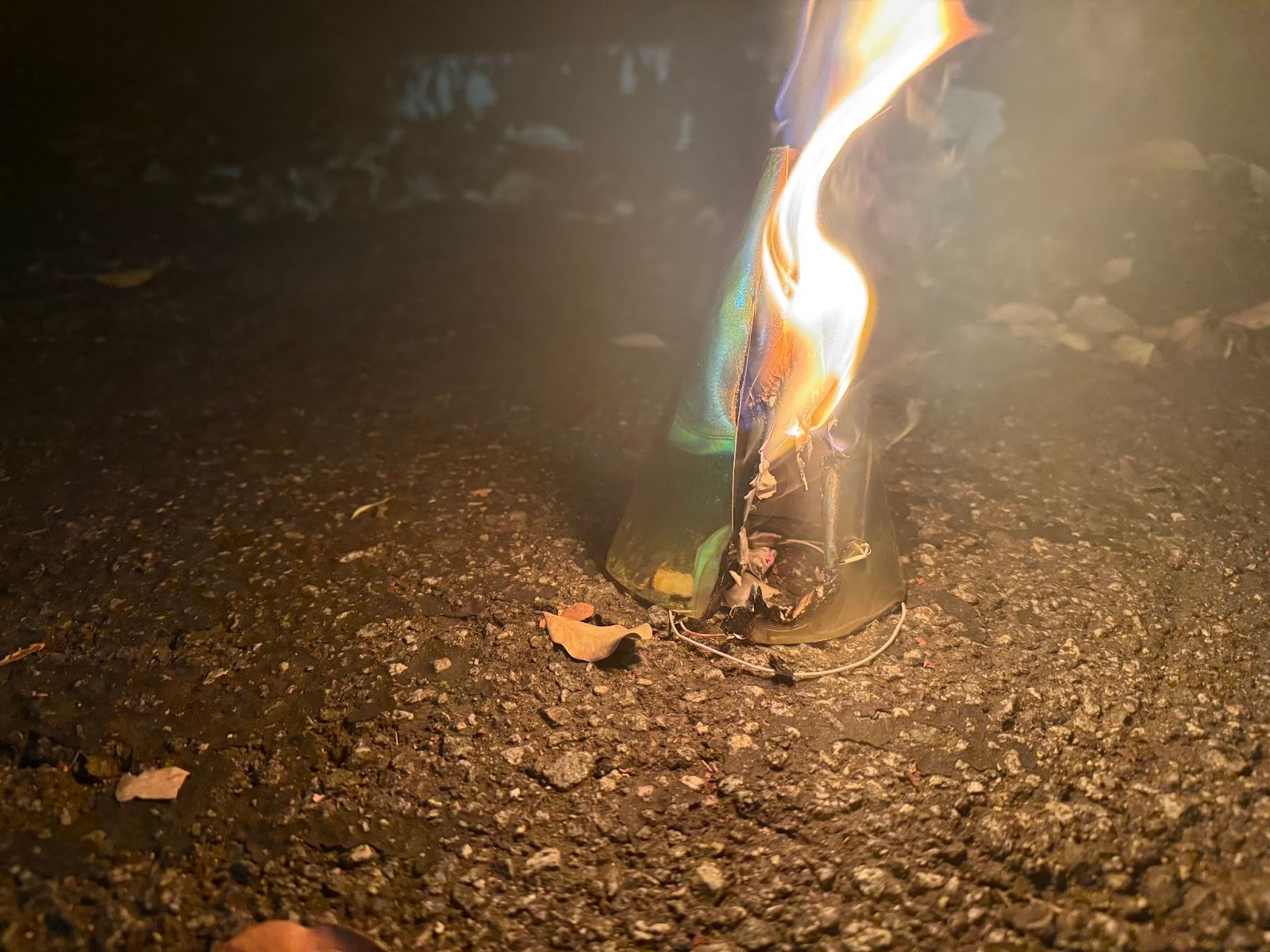

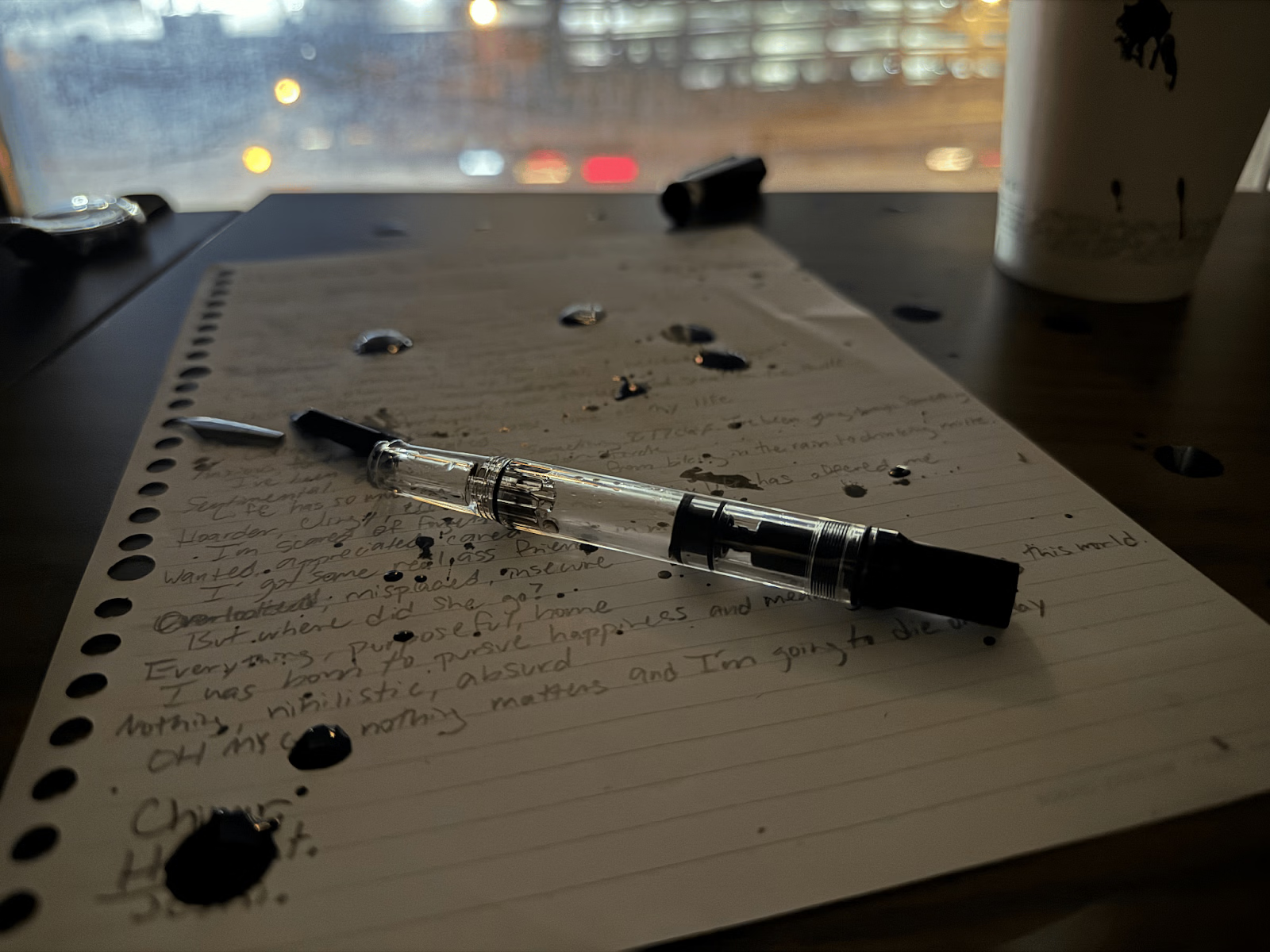

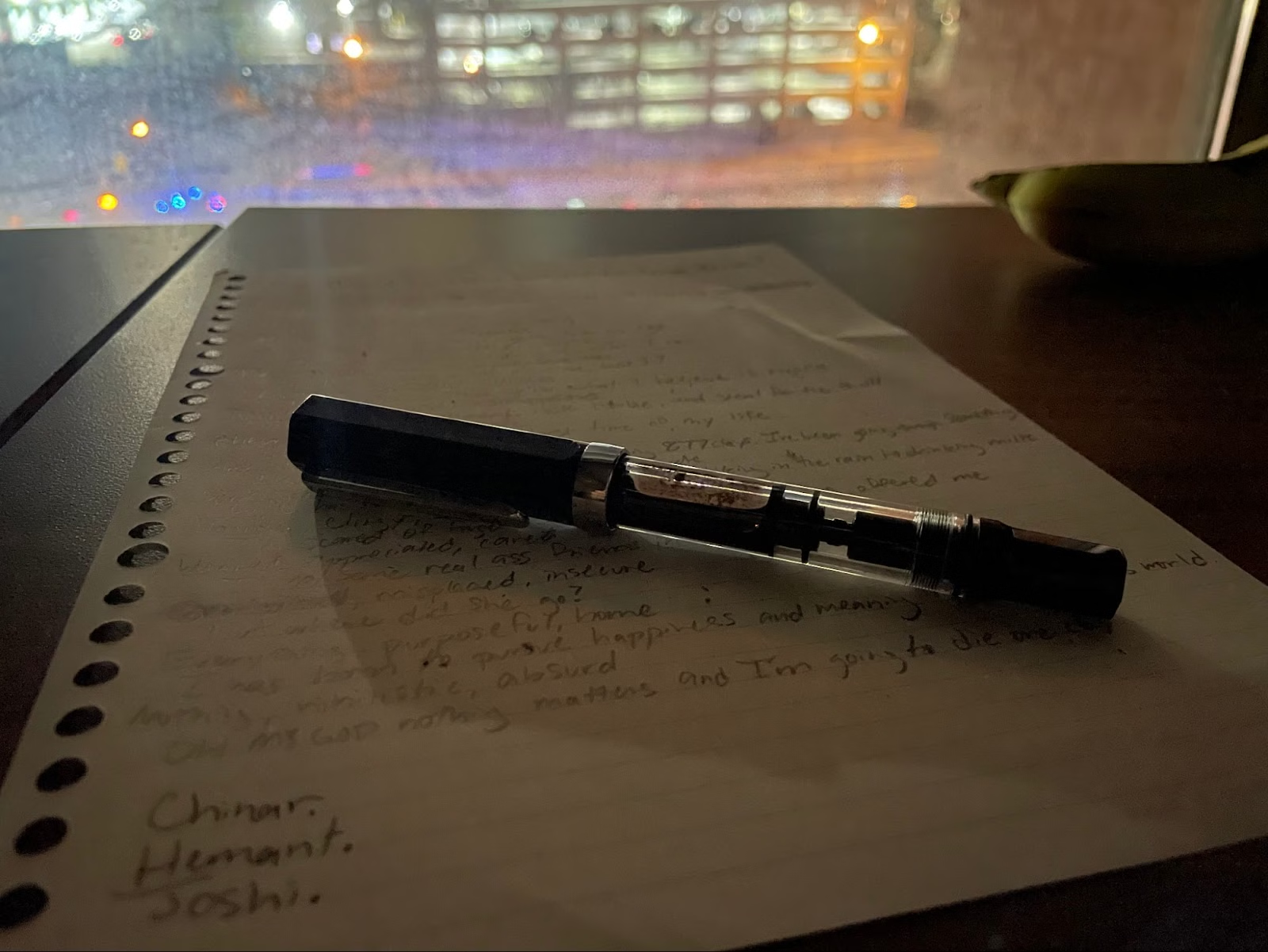

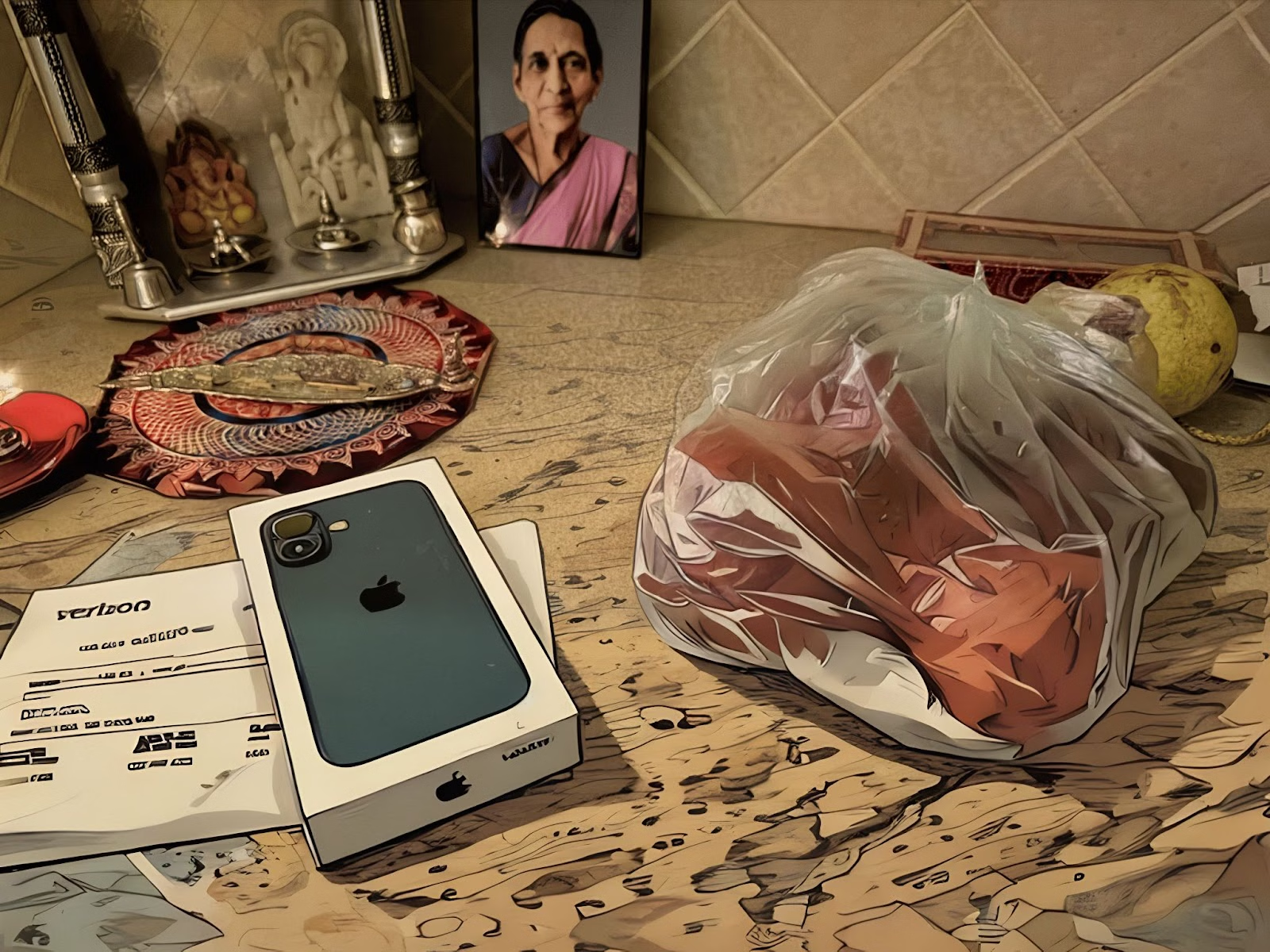

Prologue

Epilogue

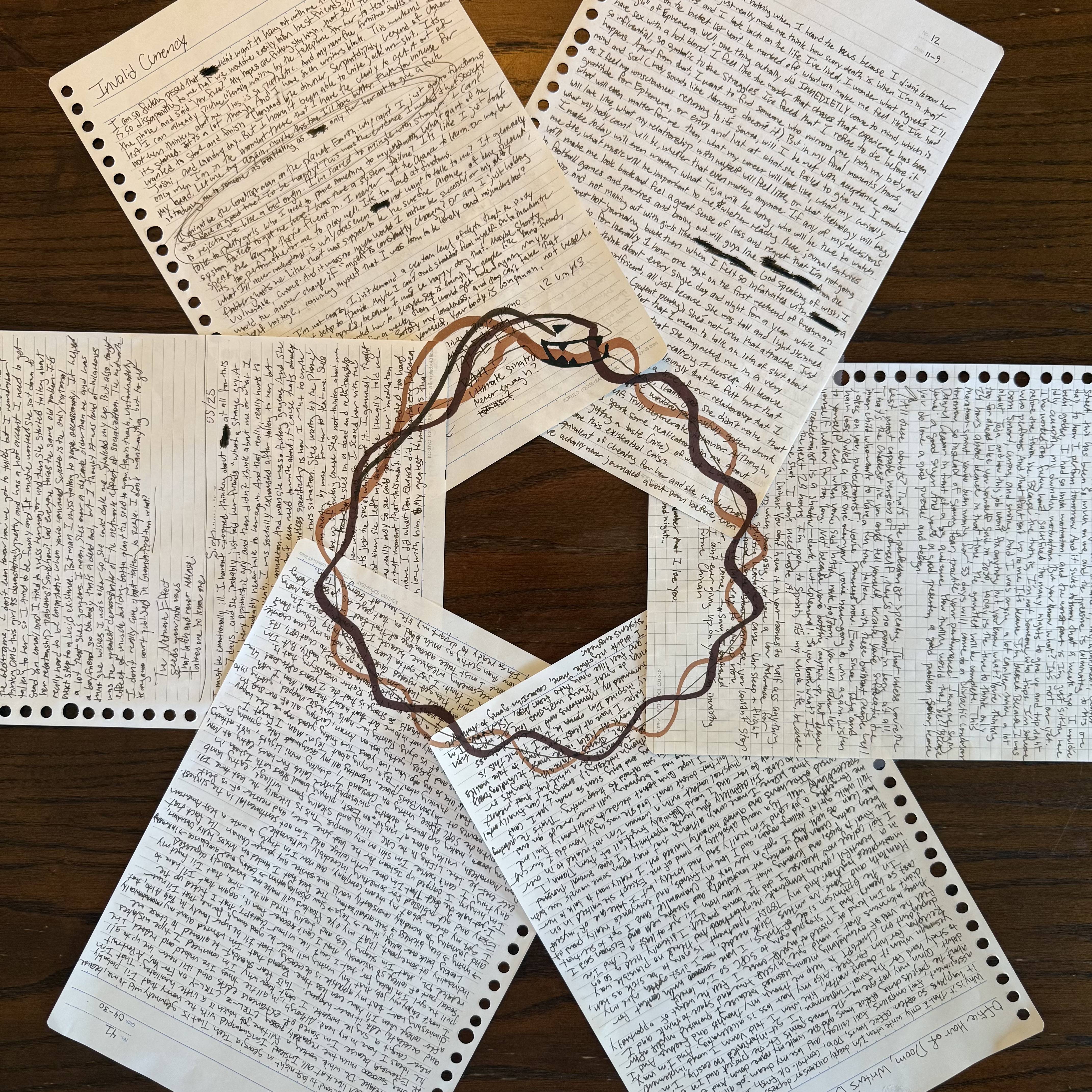

Who am I?

Aug '24 — Oct '24

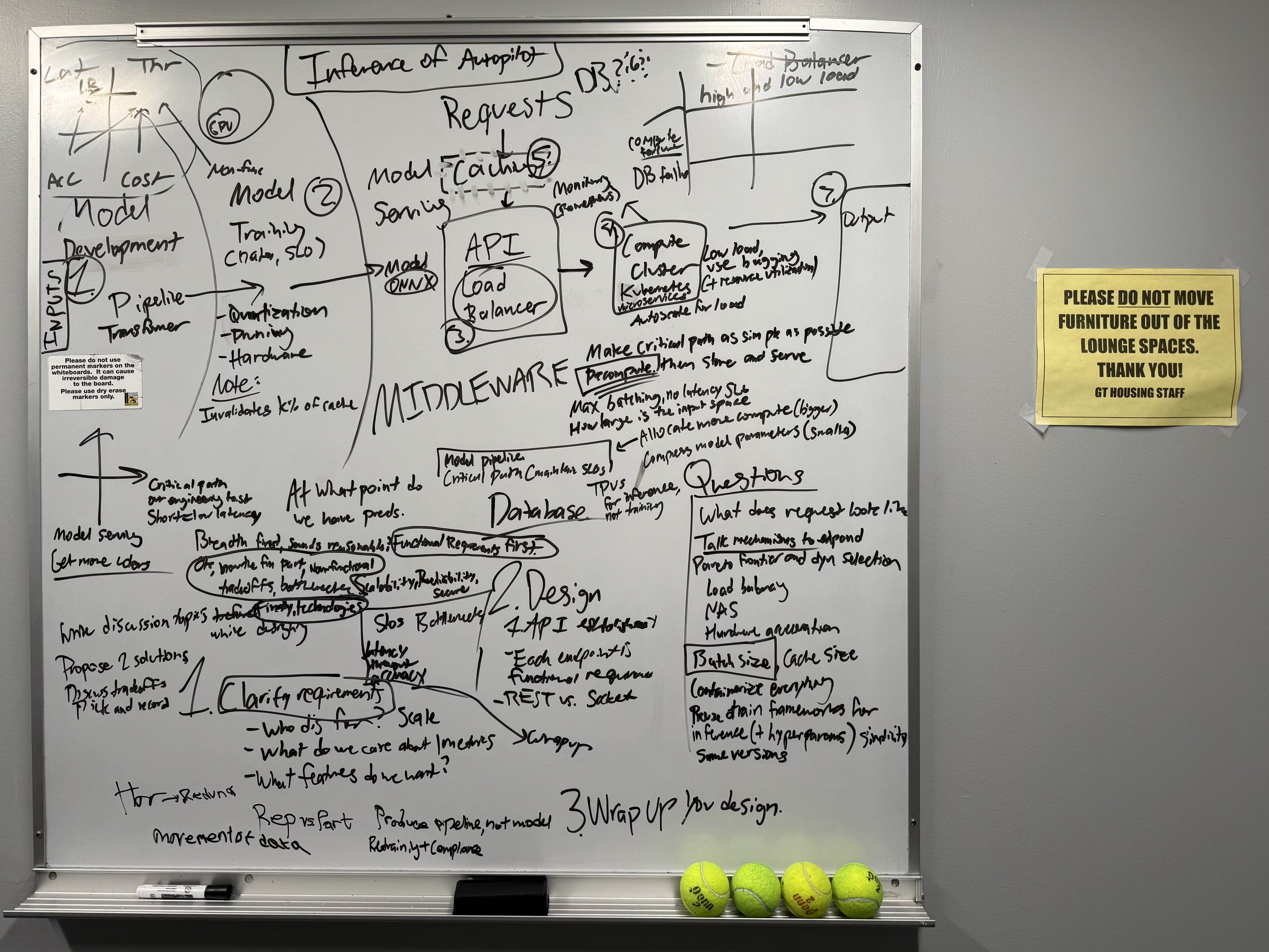

What have I done?

Jun '24 — Aug '25

What can be done?

May '04 — Dec '24