Front End

About the learned Joshi's parsing, exploring

fine-tuning and formalization

Middle End

About the iterative Joshi's optimizations, exploring

intent and isomorphisms

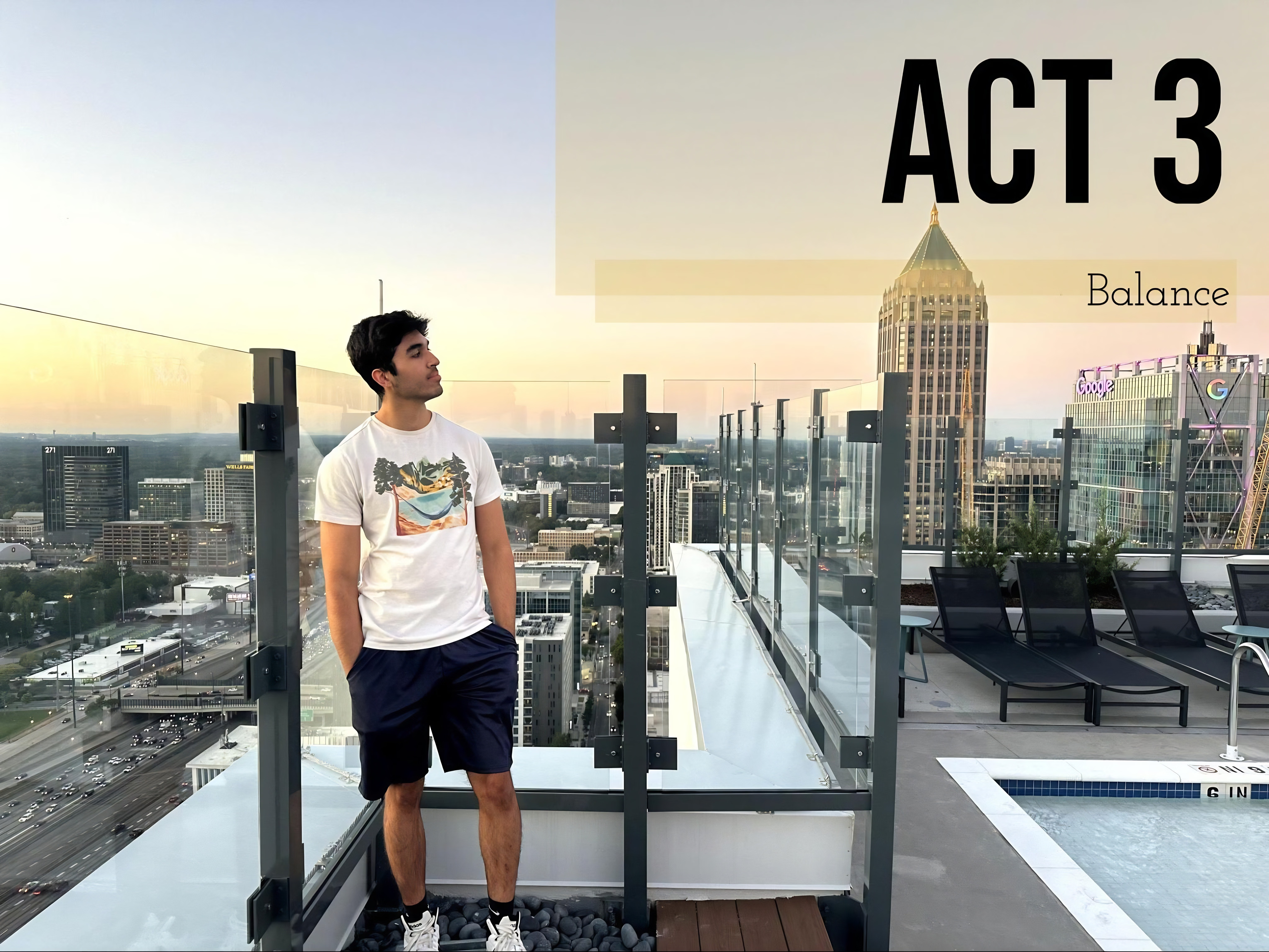

Back End

About the formal Joshi's emissions, exploring

abstraction and ASTs

Phenomenalist

About why the 5-modal Joshi is the only one in his head, exploring

solitude and solipsism

Berkeley: Reprise

In which the graduated Joshi revisits his wayward words, exploring

pretention and purpose

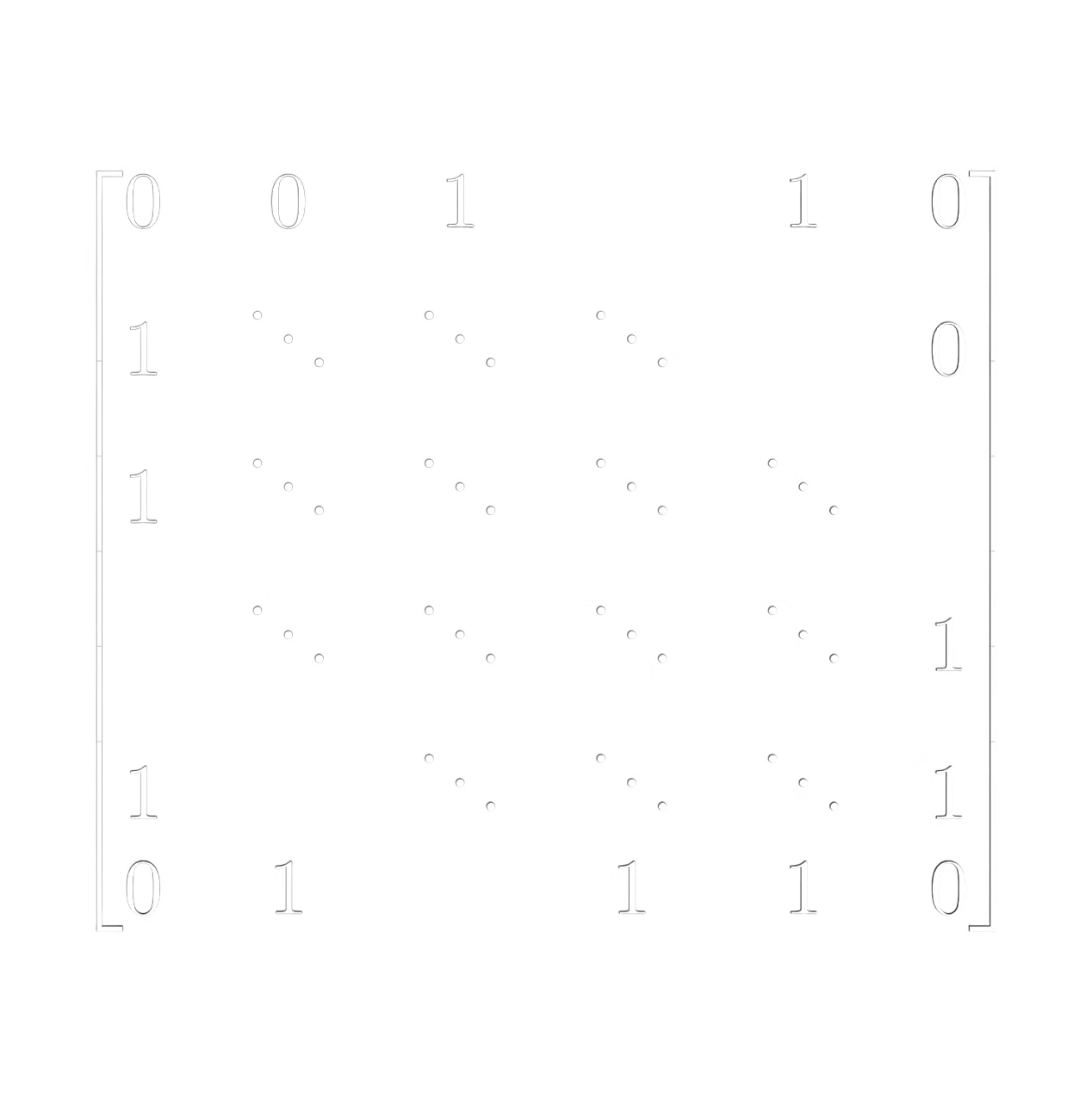

P ≟ NP

About why the quiet Joshi might never find the right words in polytime, exploring

decision and decidability

SELECT * FROM things ORDER BY love LIMIT 5

About that which the opinionated Joshi’s can’t live without, exploring

Symbols and storytime

Again.

In which the constipated Joshi explodes while cooking, exploring

metaphysics and metametaphysics

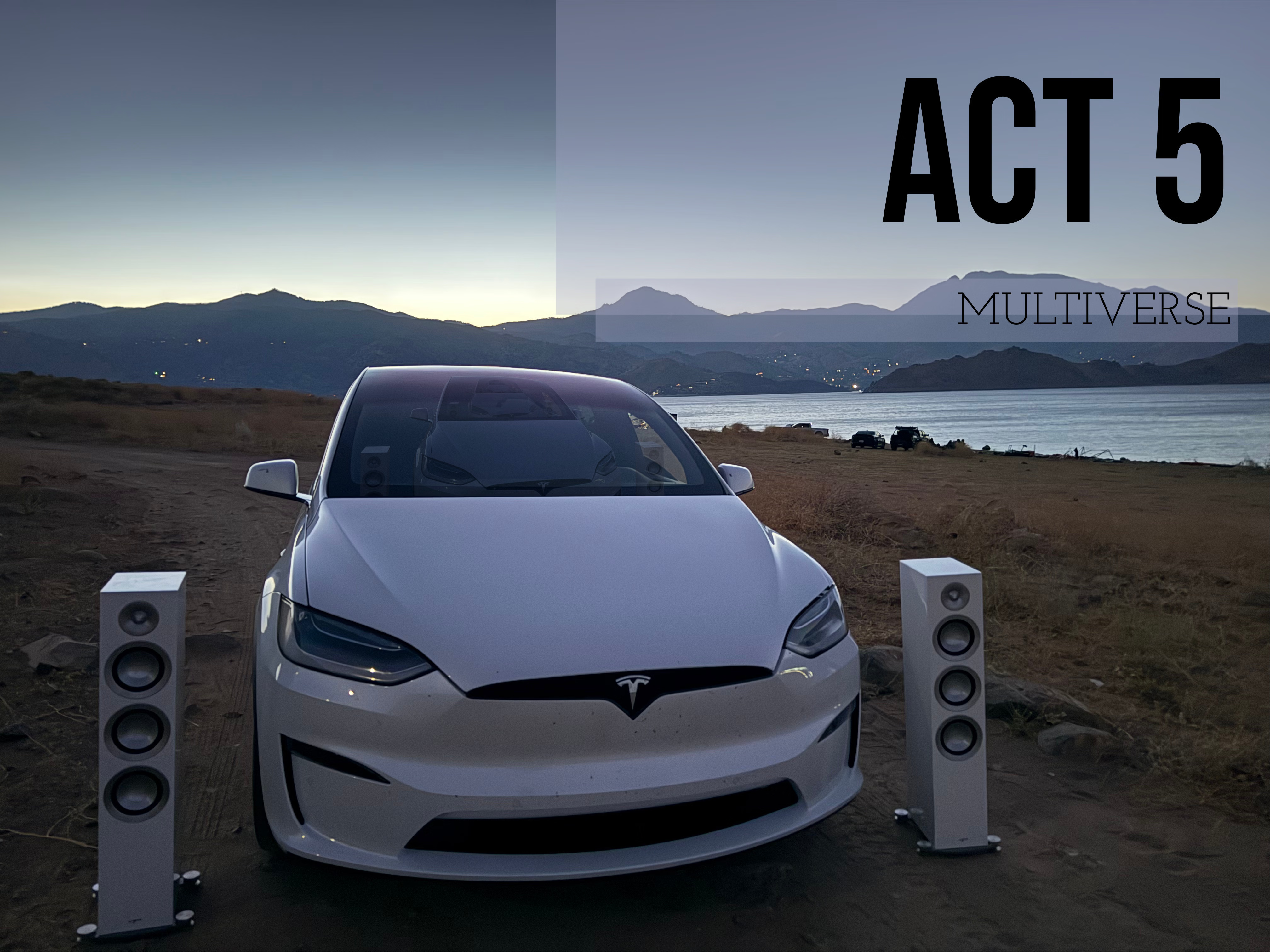

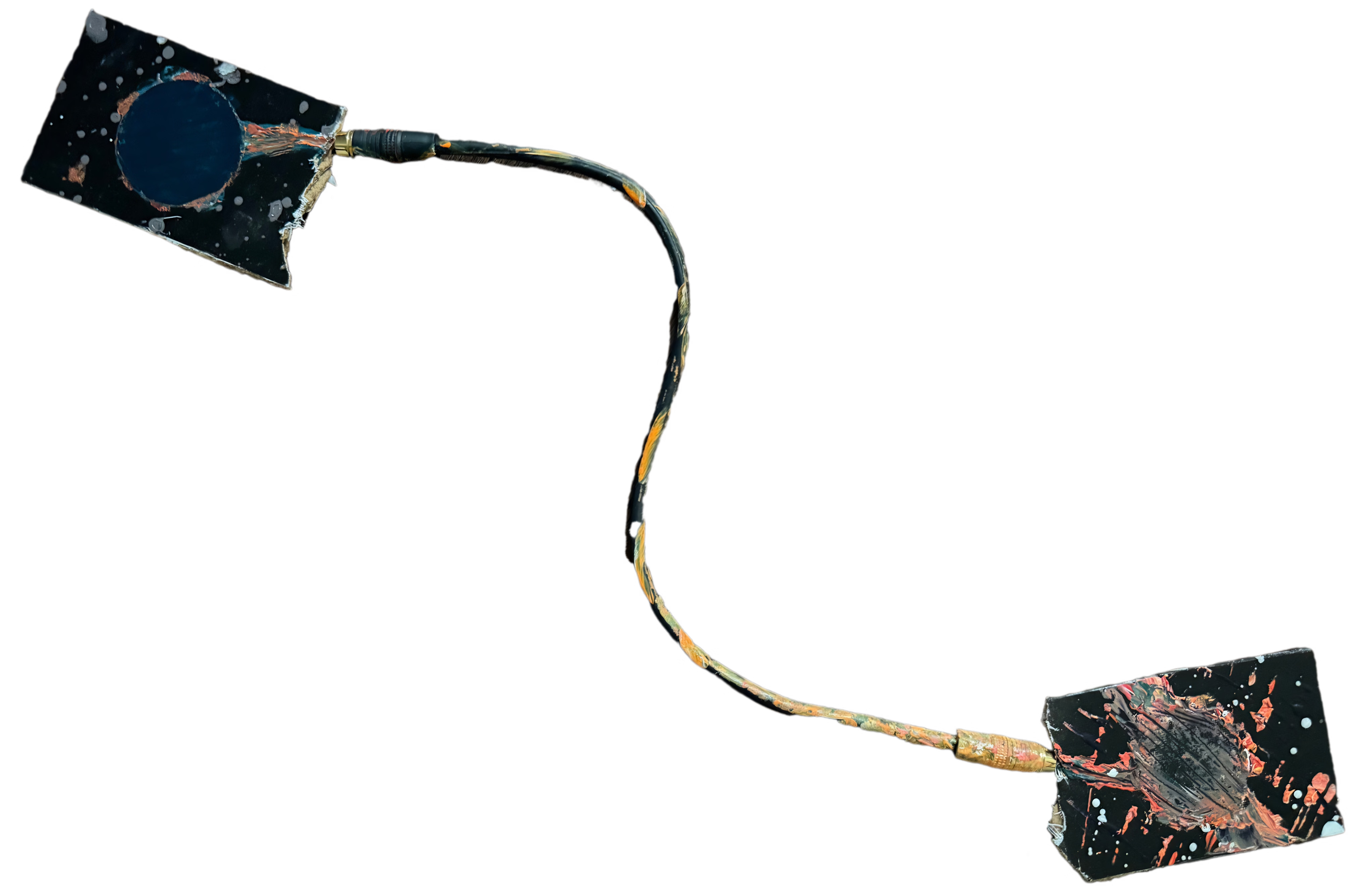

Dissonance Machine

In which the improvising Joshi takes the scenic route, exploring

FSD and floaters

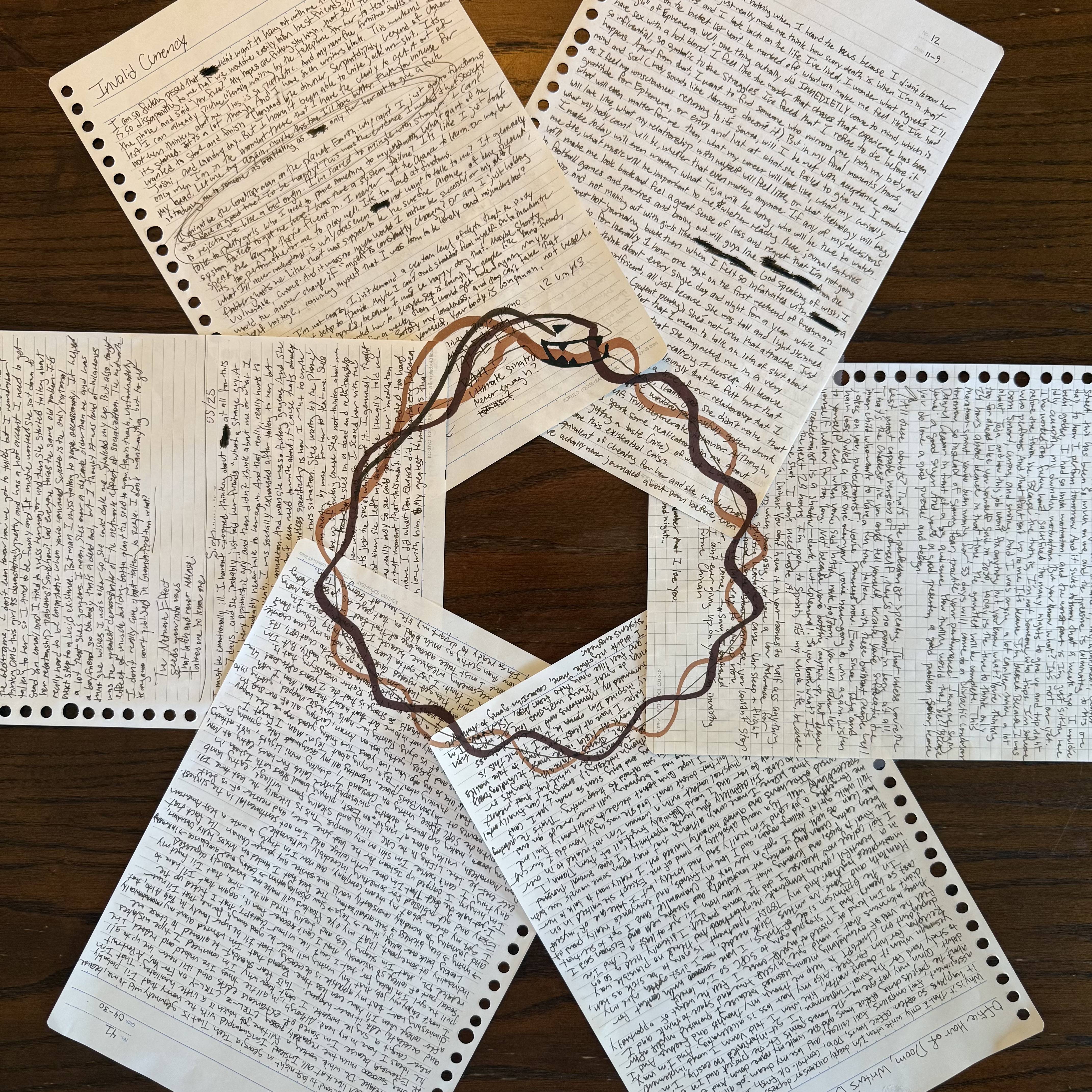

Ouroboros

In which the broken-record Joshi says the same thing over and over and over and

over and over

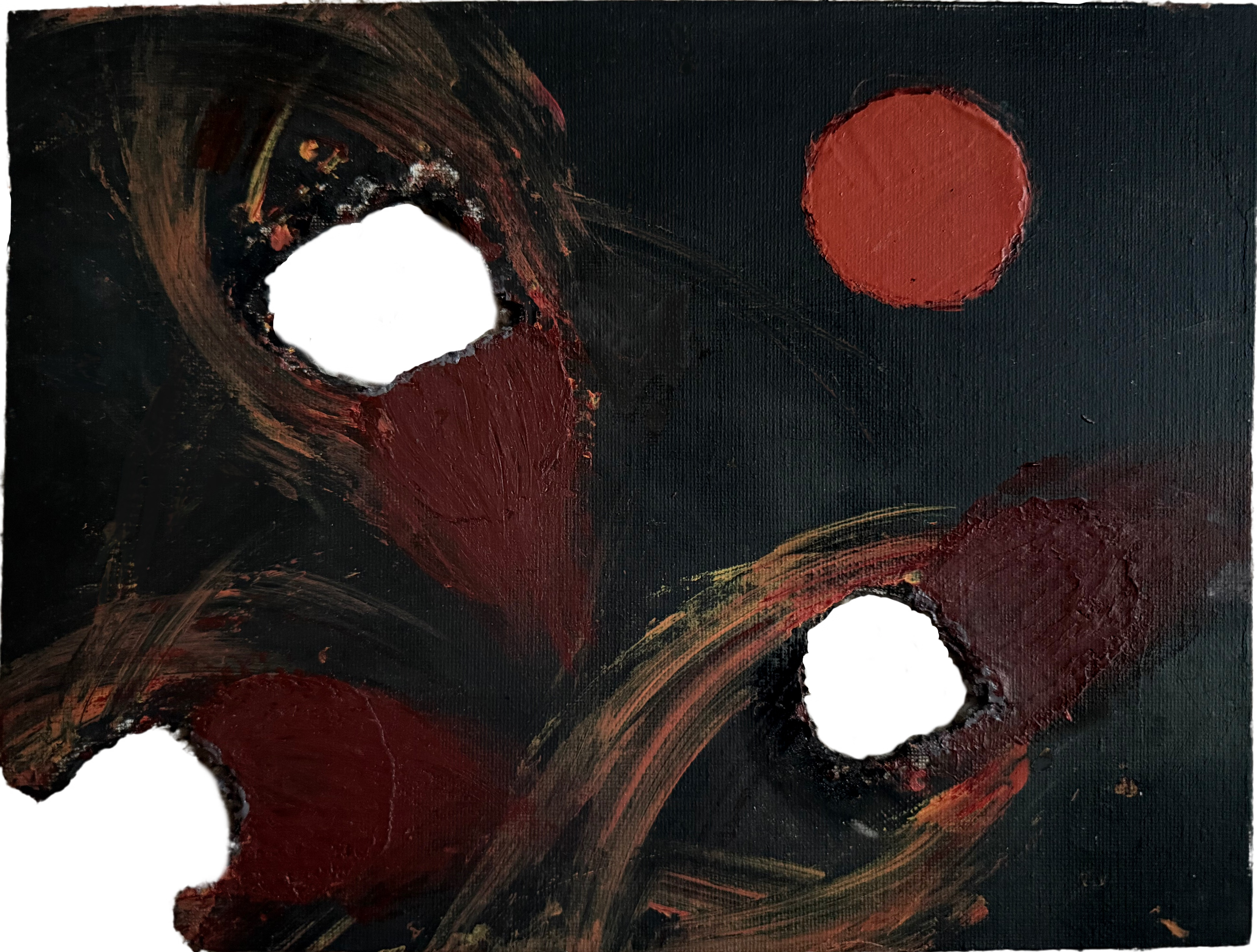

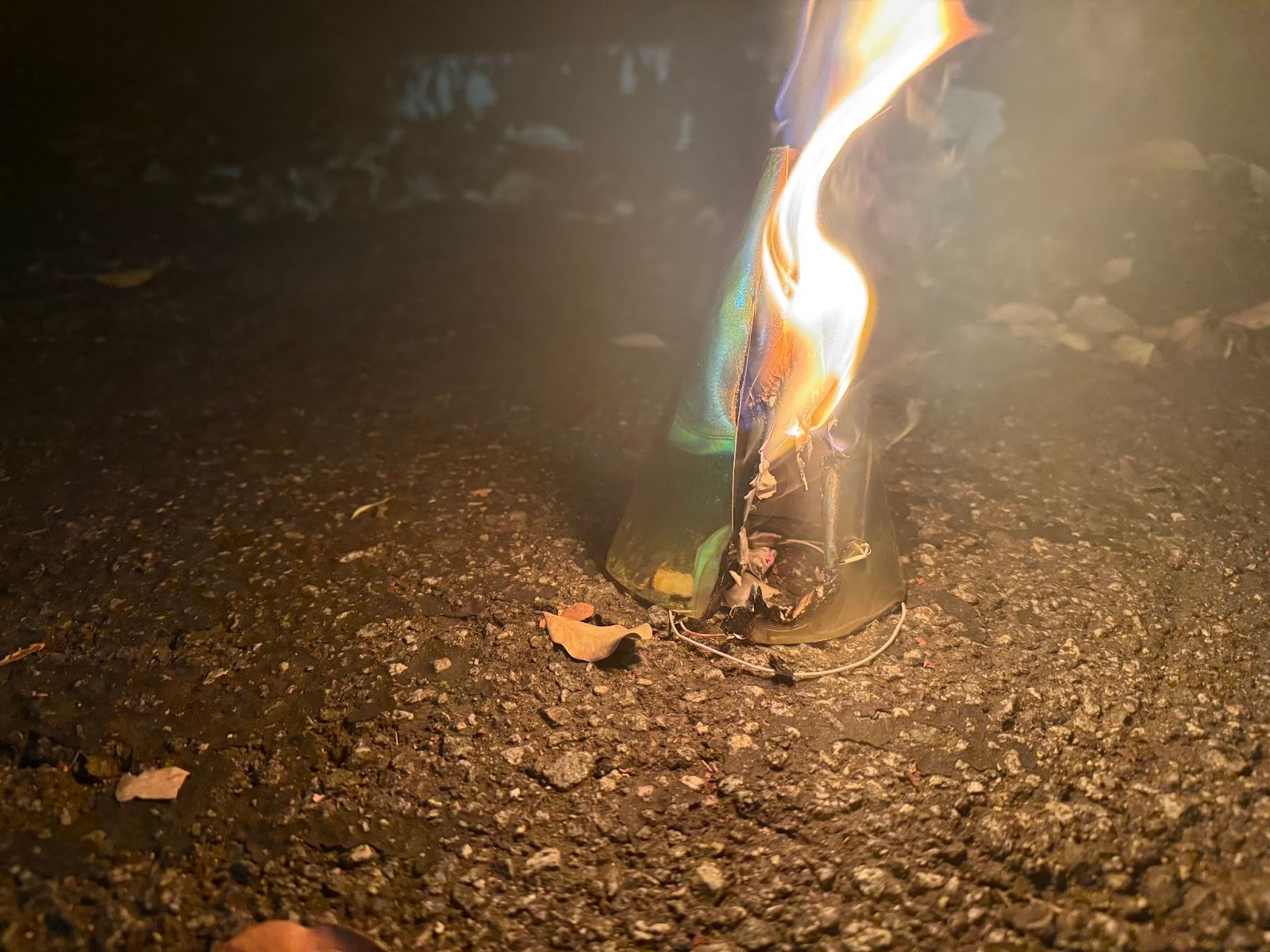

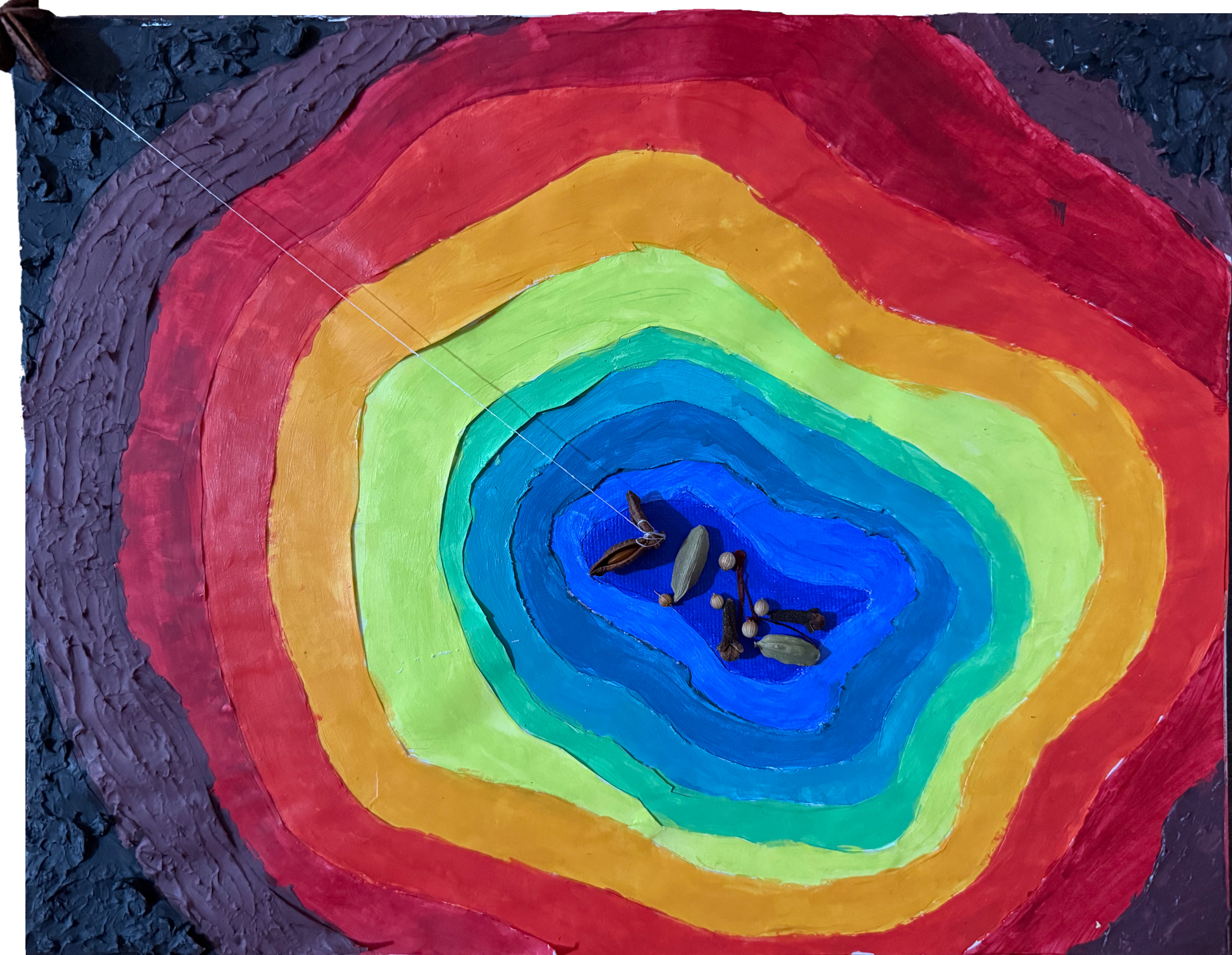

Hawking Radiation

About the ephemeral Joshi’s art-making, exploring

rumination and revelation

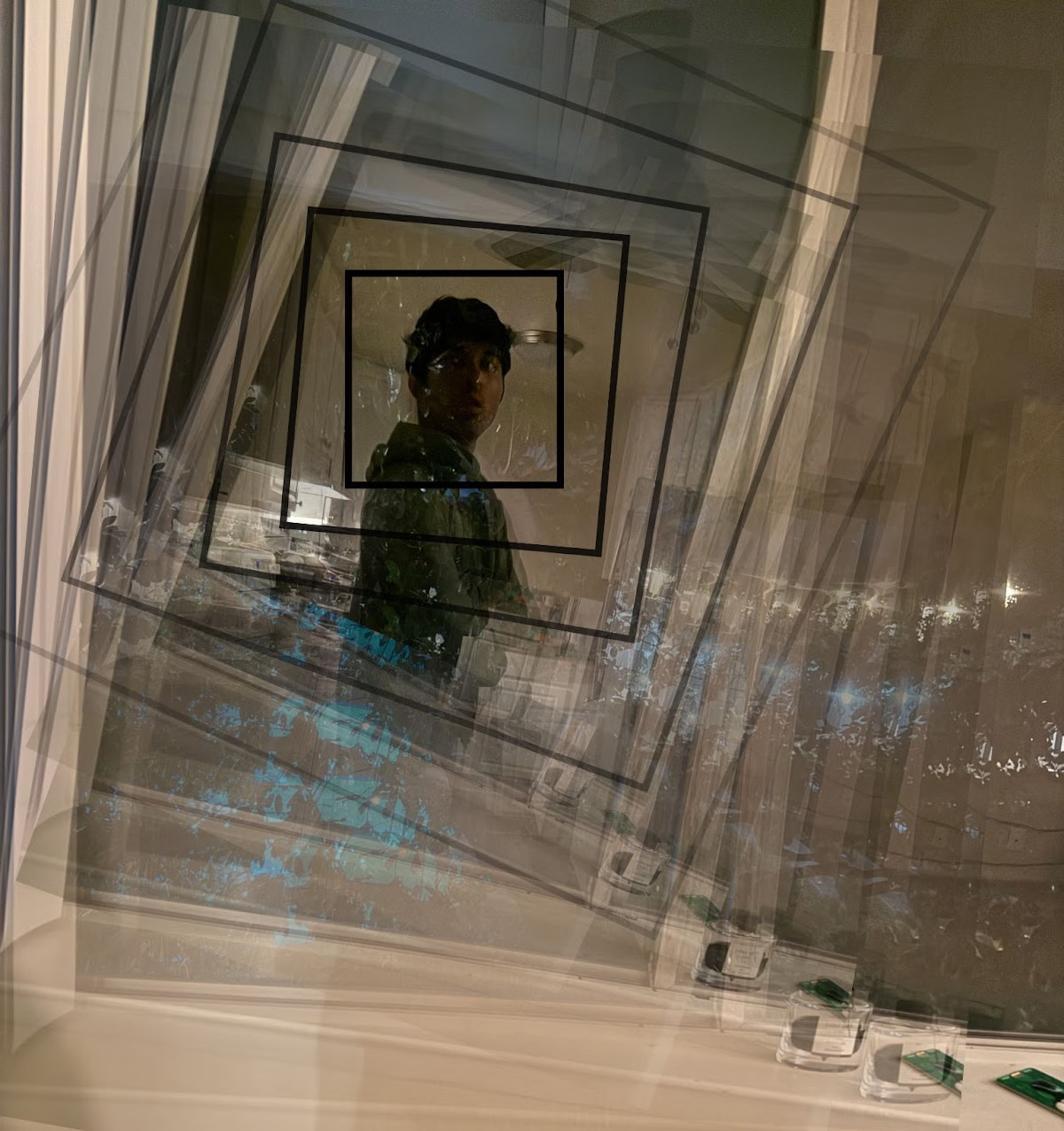

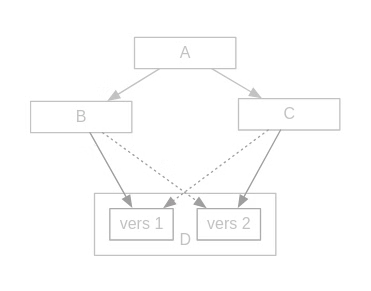

Too Many Boxes

In which the claustrophobic Joshi goes home home, exploring

compartmentalization and confrontation

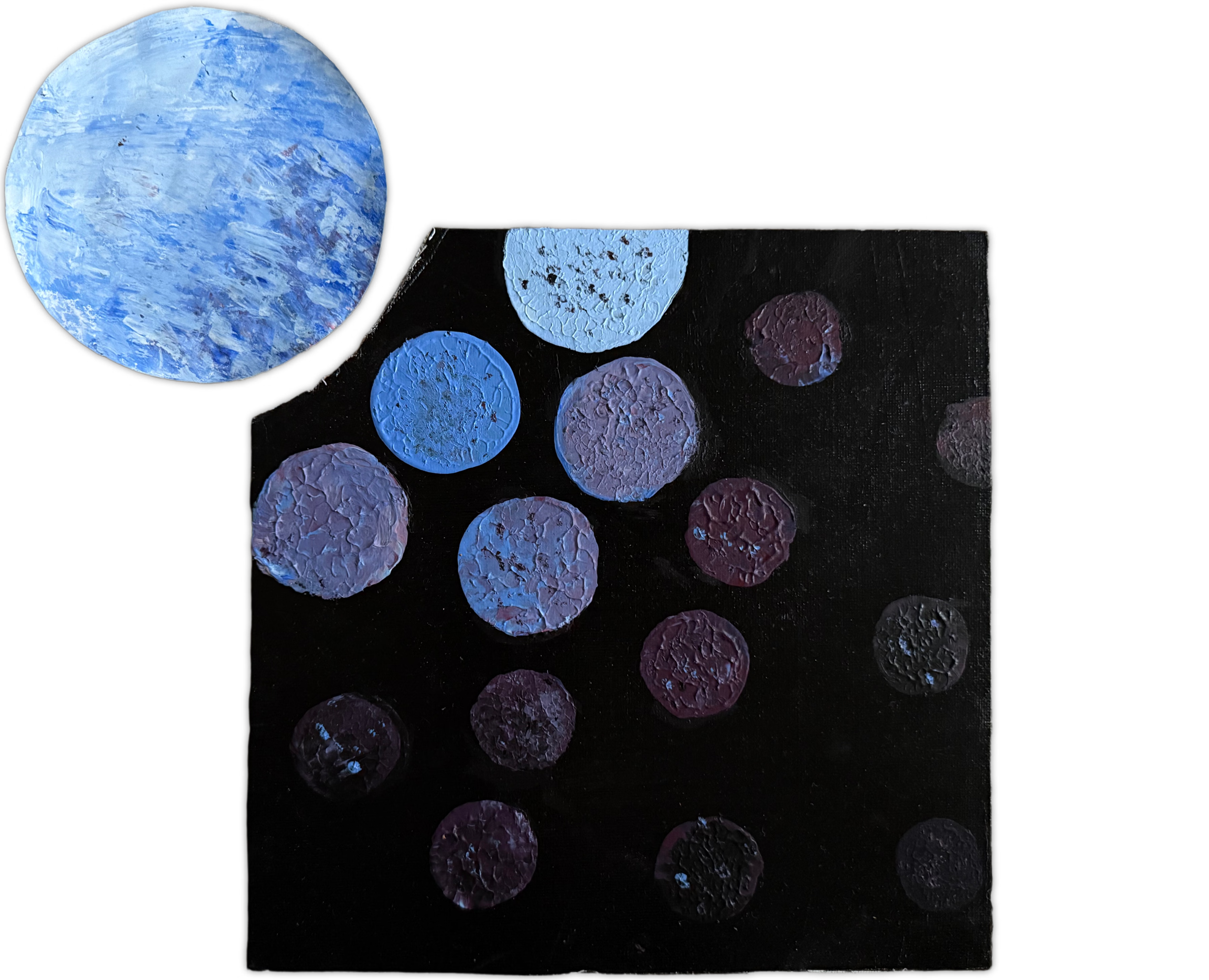

God’s Eye View

About the non-deterministic Joshi’s infinite branches, exploring

entropy and epistemology

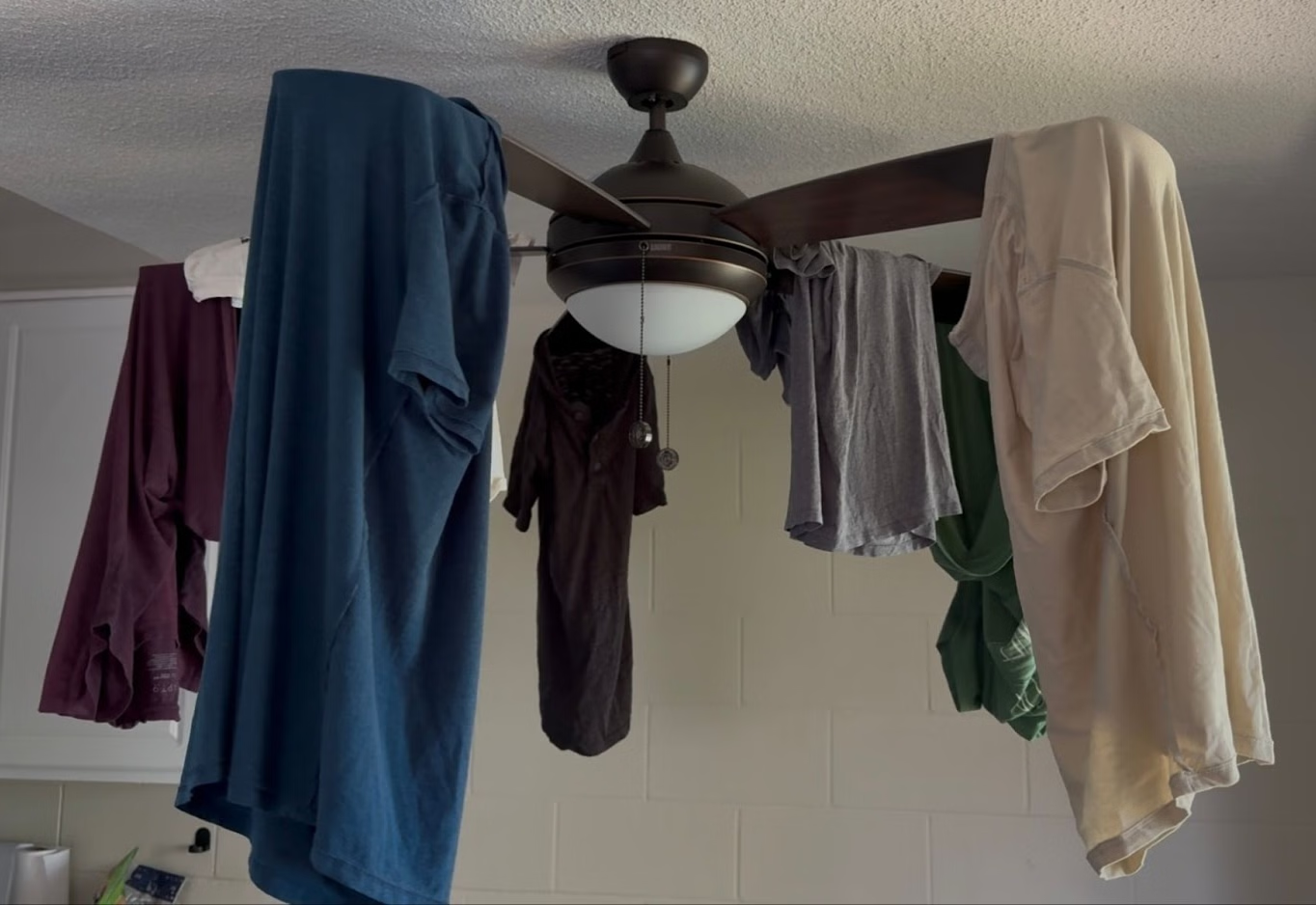

Laundry Day

About why the intergenerational Joshis love folding clothes, exploring

mistakes and multiplicity

Baryogenesis

About the physical Joshi's first phase, exploring

antimatter and asymmetry

fork()

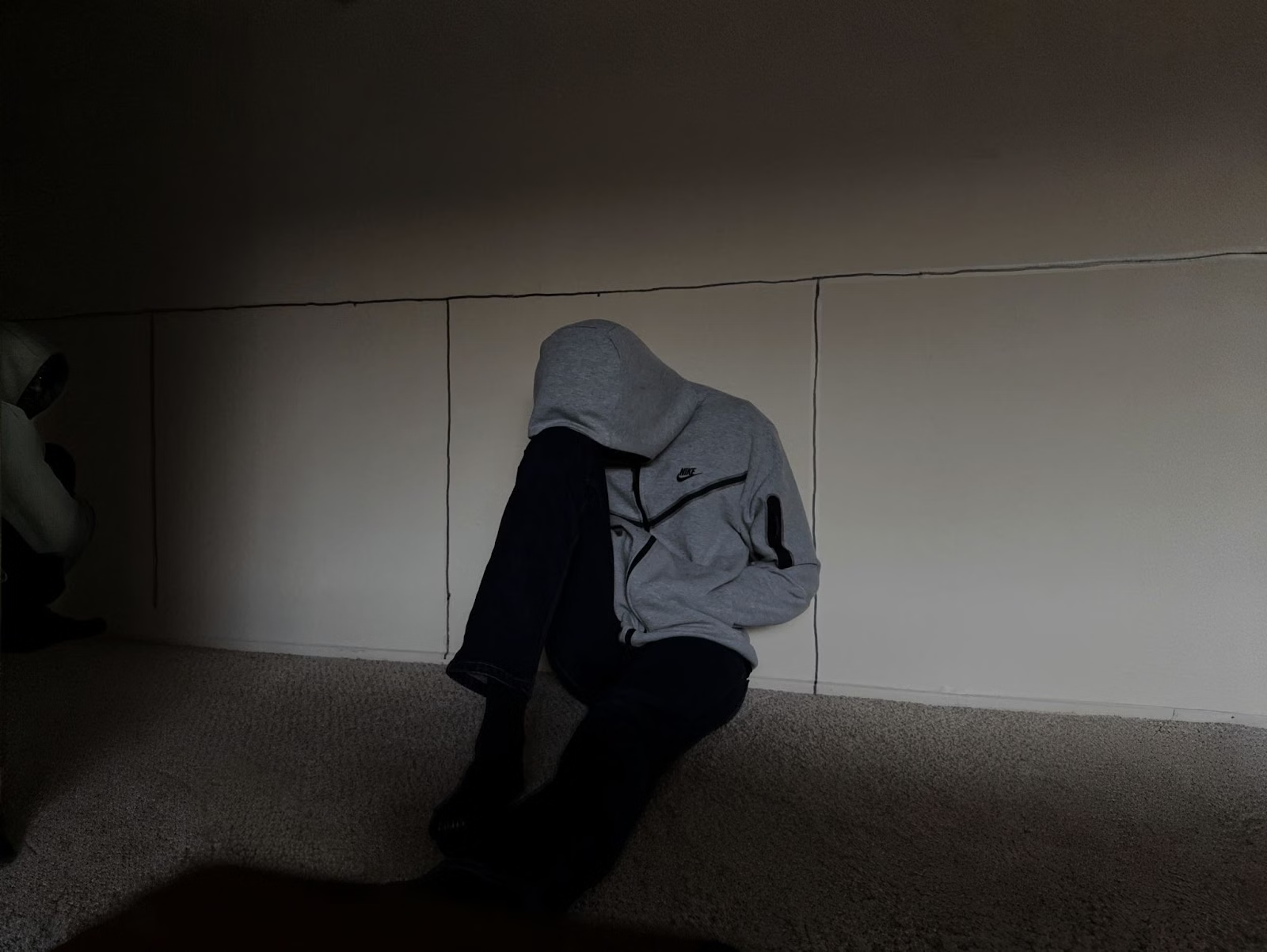

In which the pathetic Joshi takes 4 months to learn a simple lesson, exploring

longing and lament

Two Drifters, Off to See the World

About the tethered Joshi’s race’s Rat Race, exploring

matter and mind

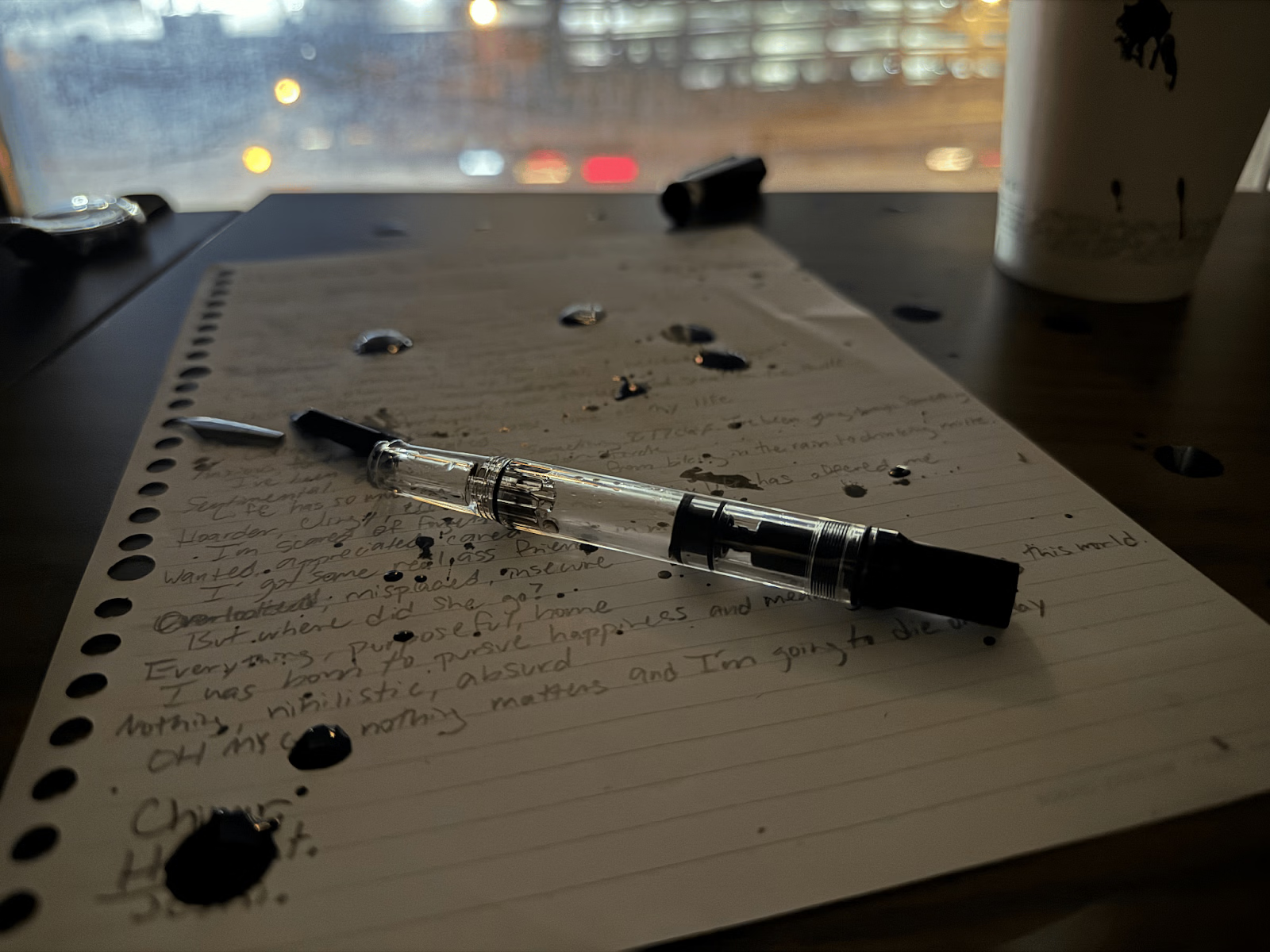

More Sonic Capacitors

Which relates the sonic Joshi’s checkpoints, exploring

commits and catharsis

Shit's Calm

About why the ‘cursed’ Joshi’s problems are actually solvable, exploring

depth and depression

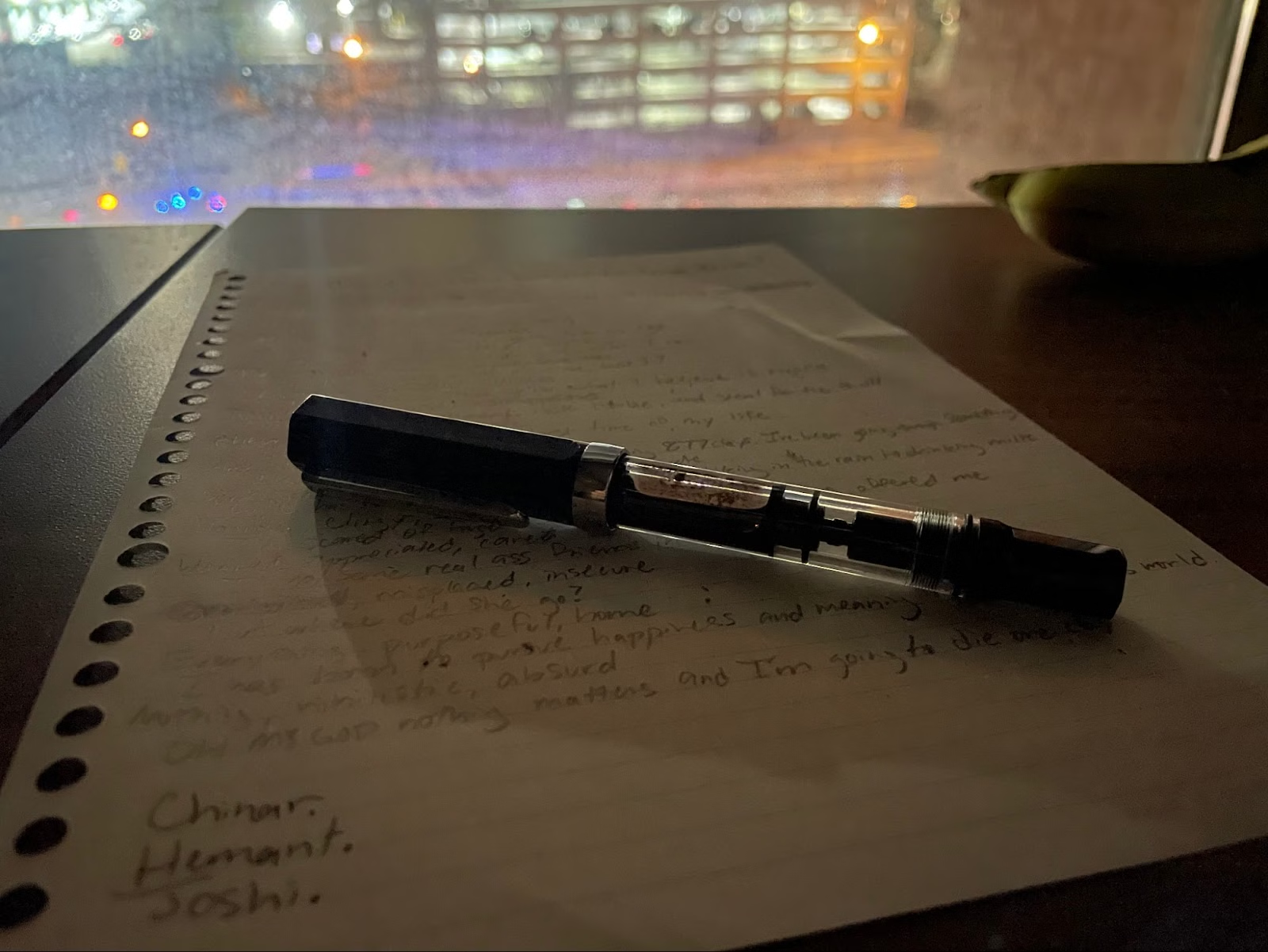

Beauty is Fragile

...

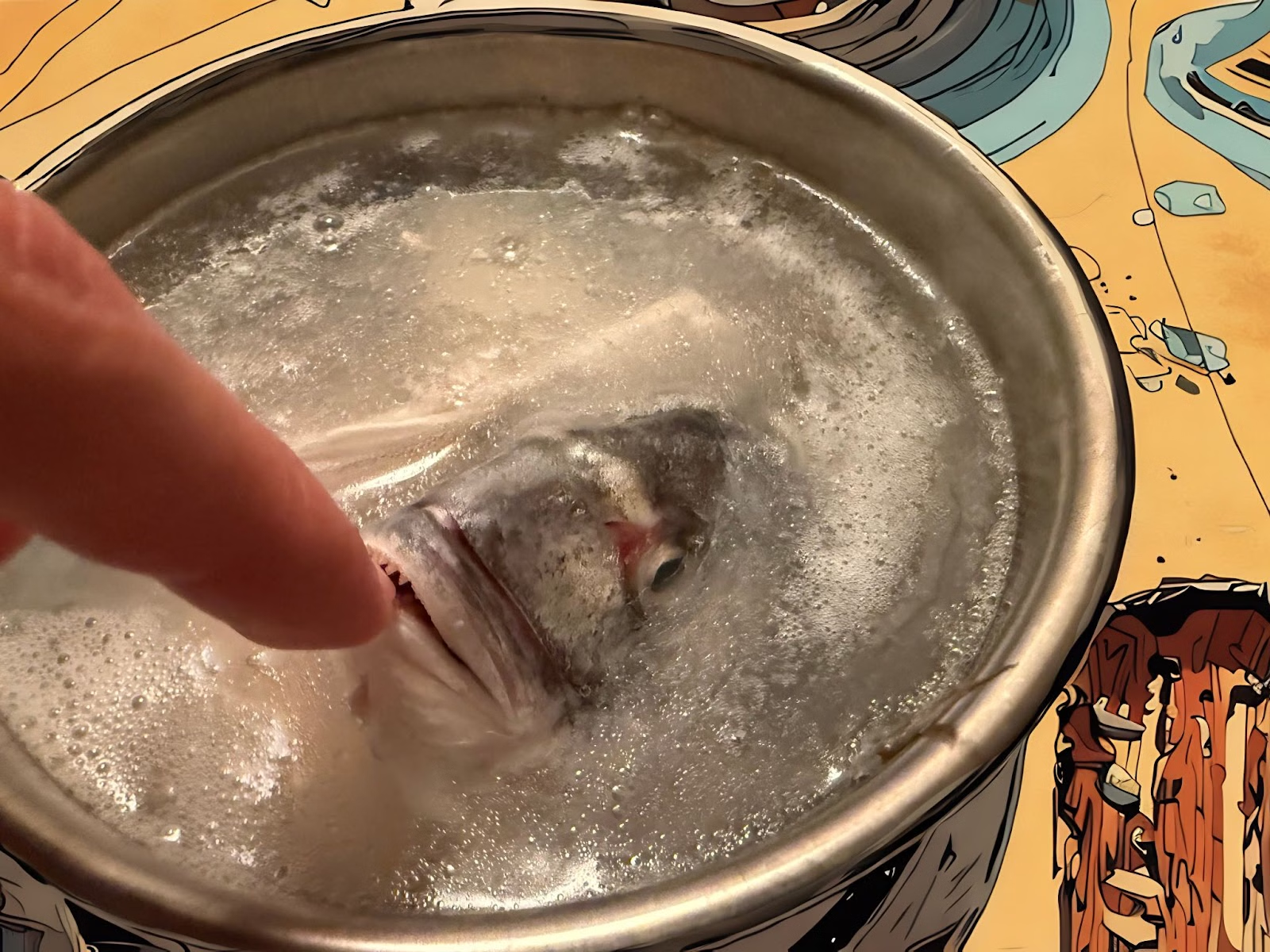

Chinar = Px, P ∈ ℝ^(3×n)

In which the 3+1D Joshi makes biryani, exploring

purpose and projection

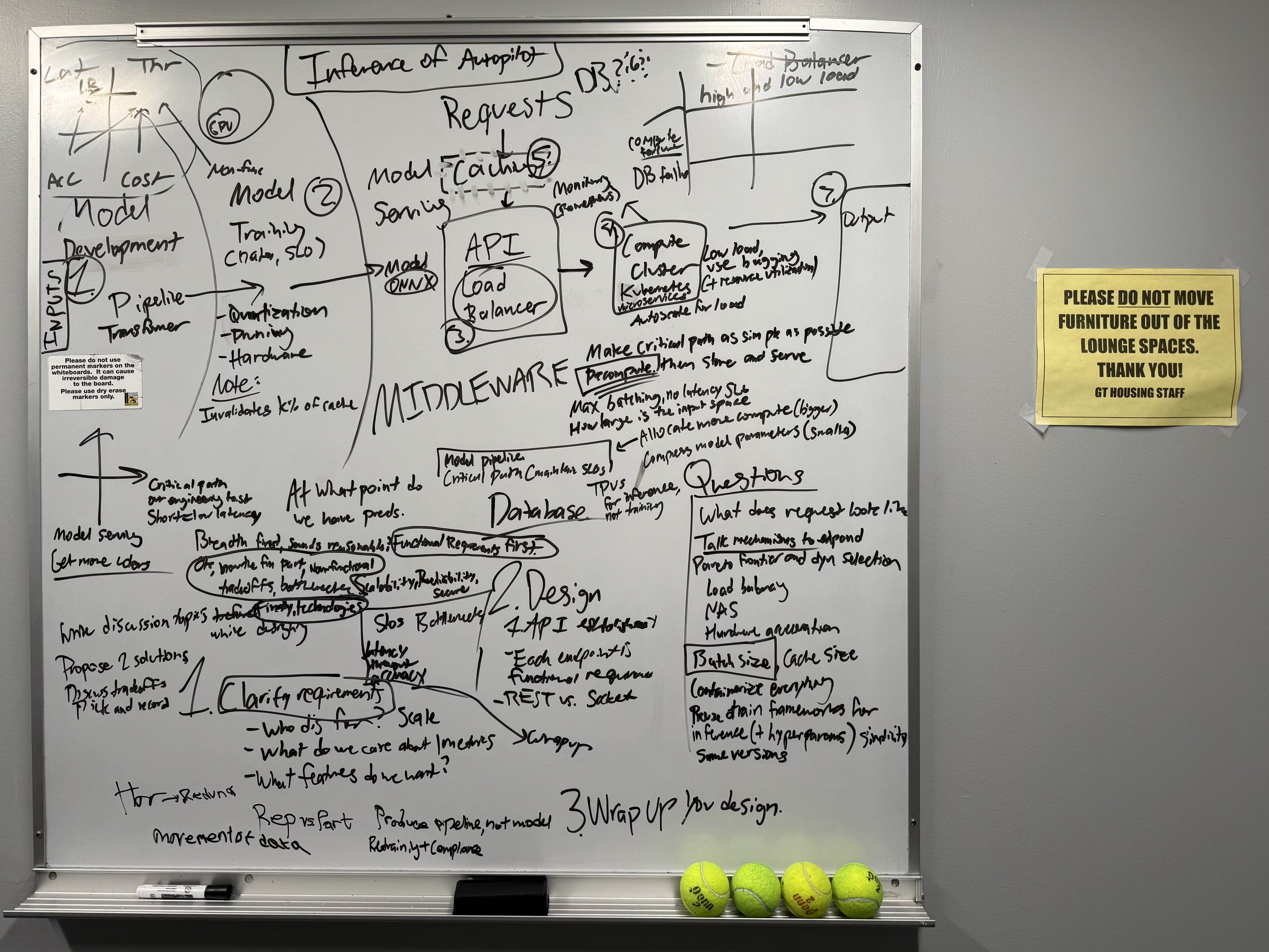

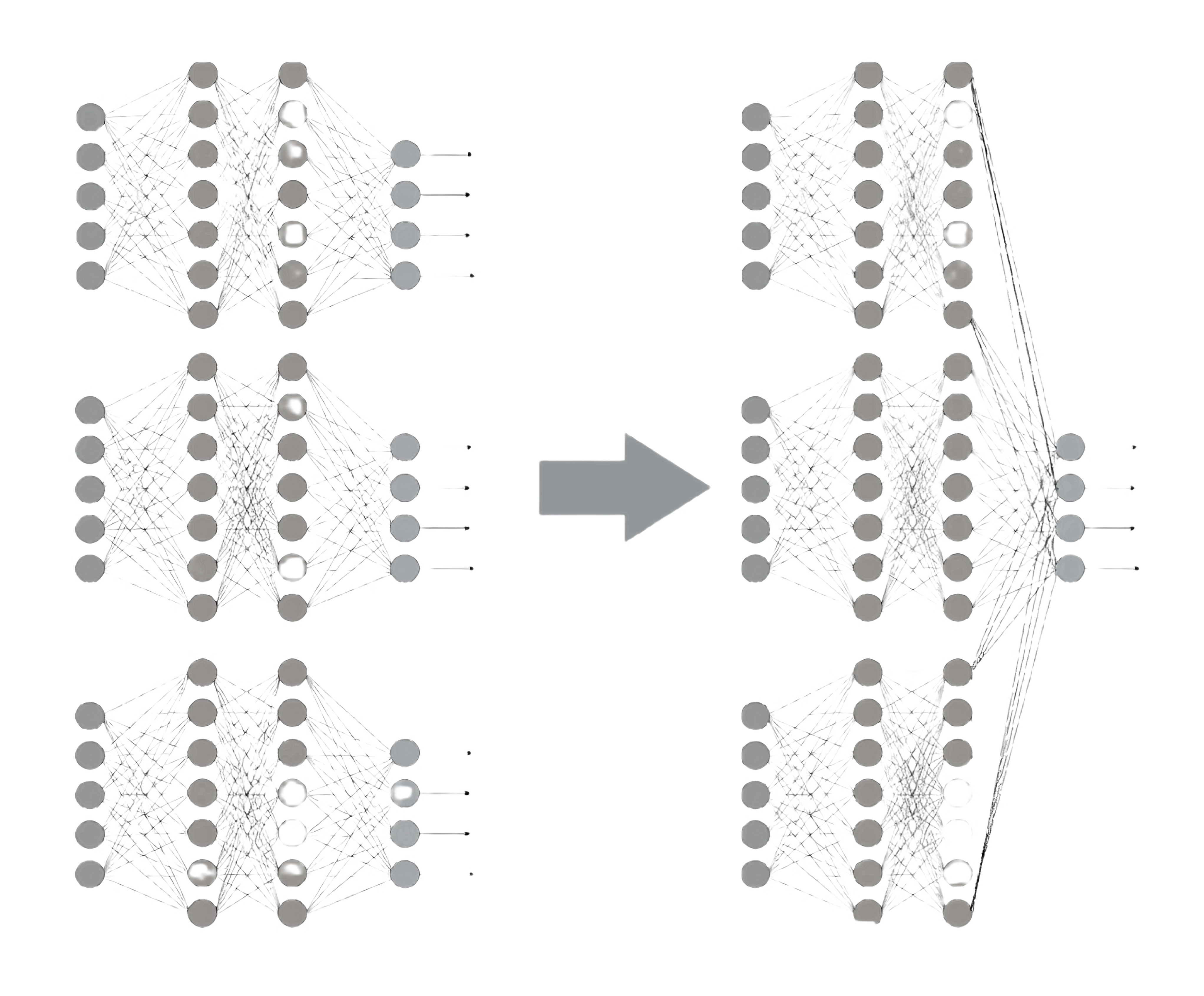

Tensor Residence Scheduling

In which the juggling Joshi abuses the NUMA hierarchy with NVLink-C2C, exploring

memory and mechanism

Inconsolatra—Garamond

In which the Markovian Joshi falls in the middle, exploring

routine and residue

Bad Egg

Decoding a 322 millisecond embedding between the lucid Joshi and GPT-12x, exploring

insight and I/O-bounds

std::queue<Being> existence

In which existence[n] addresses his neighbors, exploring

fate and FIFO

Serpentdipity

About the conversation the delusional Joshi had on his birthday, exploring

sincerity and solitude

Thousand-Bite Dinner

In which the bored Joshi almost steps on something, then does, exploring

surplus and scarcity

Echo Chamber of the Living

About the arbitrary Joshi's loss function, exploring

gradients and gravity

𝑀

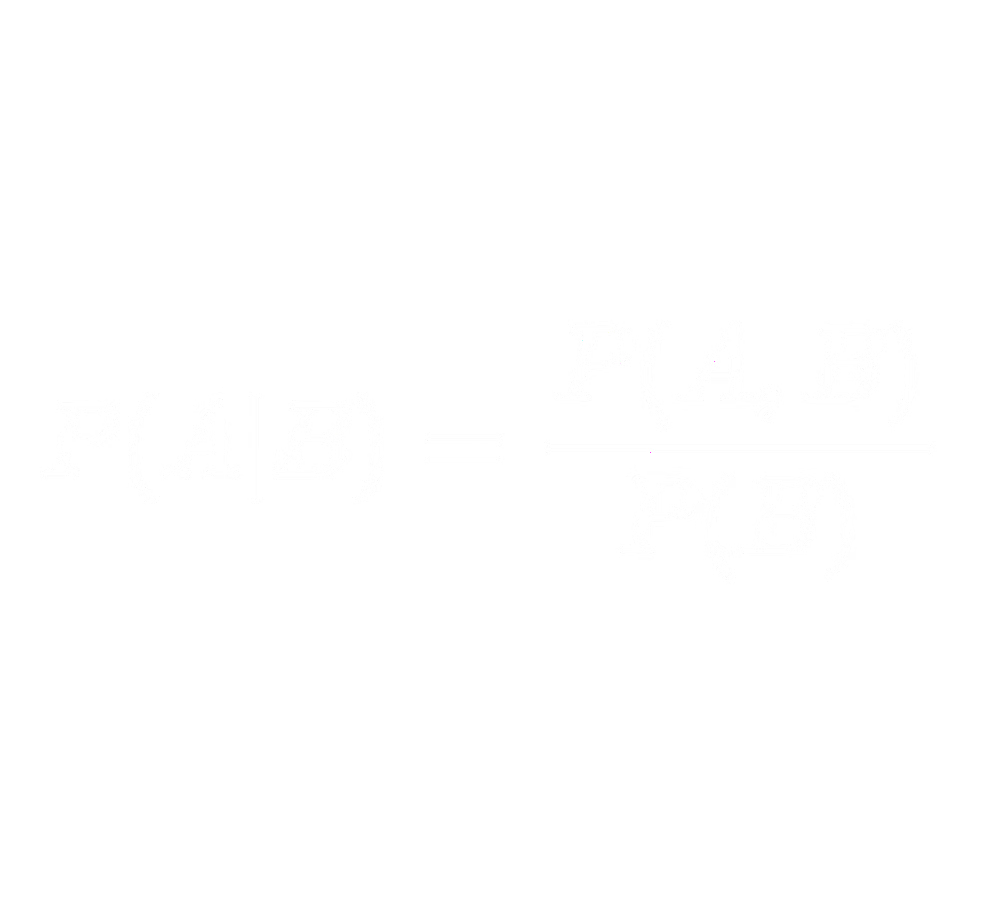

In which the lucid Joshi dissects free will with 5 sentences, exploring

sets and stochastics

Kangaroo Court

In which the amortal Joshi finally goes to sleep, exploring

salvation and surrender

2020

In which the clumsy Joshi accidentally destroys the spacetime continuum, exploring

gratitude and growth

My Nails Reek of Flesh

About why the culinary Joshi wanted to go vegan for a week, exploring

tastes and tinkering

Poetry is Pointless

About why the cynical Joshi thinks philosophy is just another coping-mechanism, exploring

vulnerability and vindication

Inception 2

About why the sleeping Joshi savors the mundane, exploring

sights and surreality

Half Man, Half Machine Learning

In which ChatGPT is always there for the dependent Joshi… until it isn’t, exploring

availability and autonomy

Sonic Capacitors

In which the cathartic Joshi discharges some of his favorite songs, exploring

sounds and sentiments

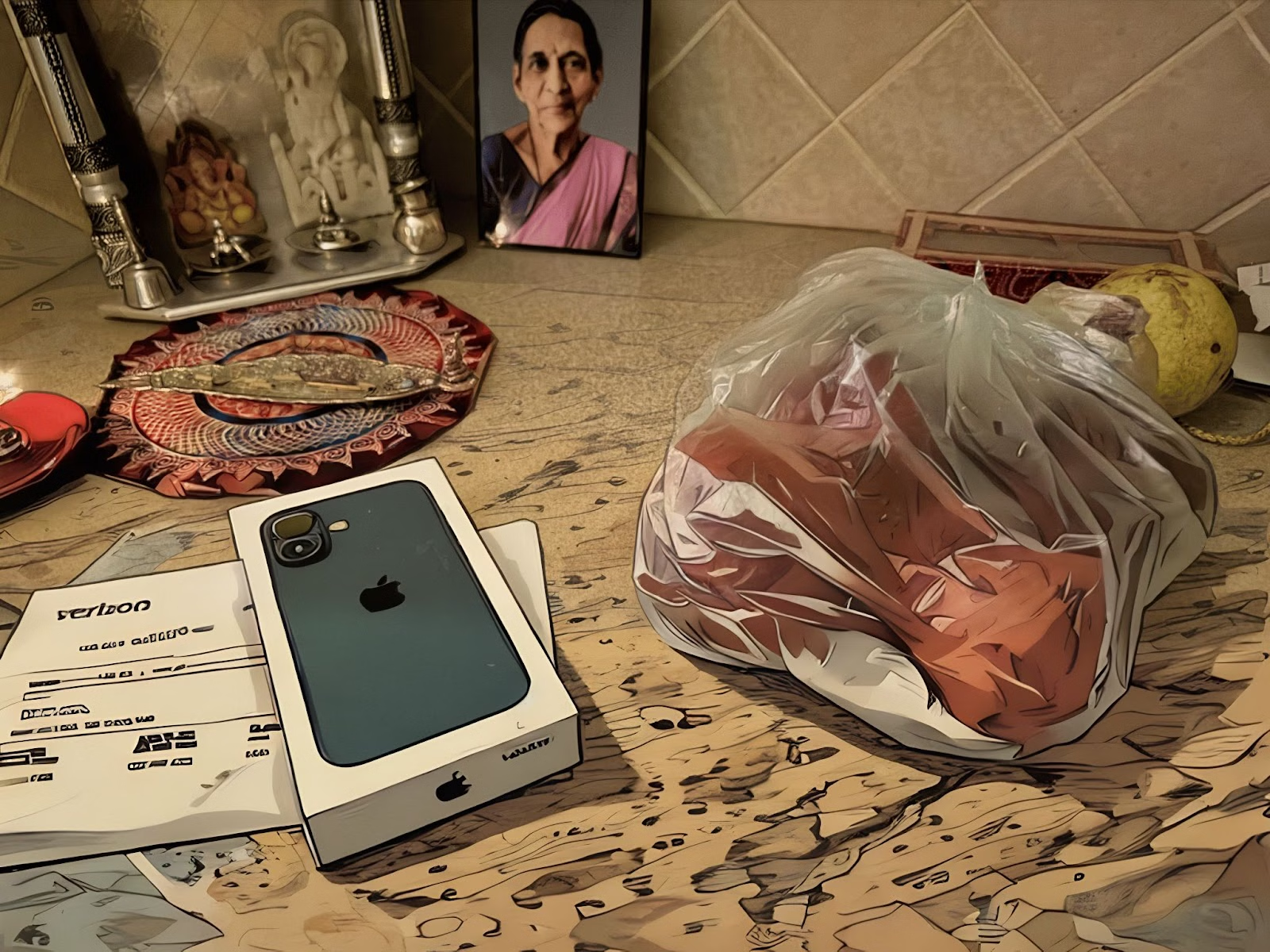

iPhone 26 Nouveau Ultra+

In which the panpsychist Joshi’s new phone acts a little off, exploring

sentience and singularity

My Cold Fingers Say

Which relates vignettes of the nostalgic Joshi's favorite tactile memories, exploring

touch and thoughtfulness

2030

In which Sir Joshi saves this journey from an anticlimax, exploring

humility and hope

Three(ish) Words

Describing the contradictions of the indescribable Joshi, exploring

expectation and expression

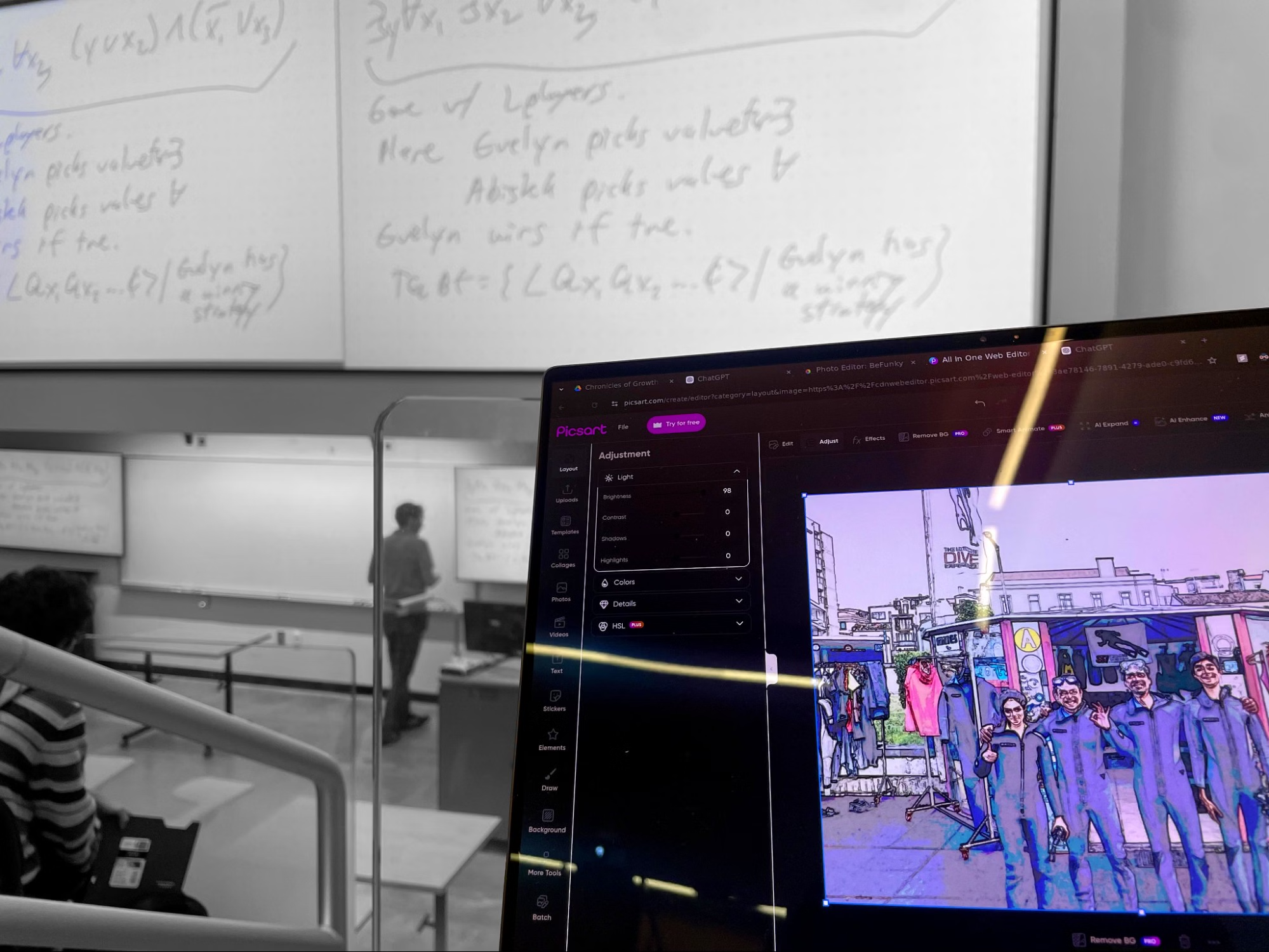

DFS

In which the caffeinated Joshi attends a lecture on space complexity, exploring

wondering and wandering

CTRL-z CTRL-y

In which the mutable Joshi is convinced that something has changed, somewhere, without his knowing, exploring

perfectionism and paranoia

Practicing for Disappointment

Which relates the conversation the sweaty Joshi expects to have on his next date, exploring

anxiety and apocalypse

Masochist Mountain

About why the restless Joshi will never let himself be satisfied, exploring

valor and validation

A Solipsist Language

Relating the amusing musings of the confusing Joshi concerning the nature of nature, exploring

TMs and termination

The Other Side of the Pond

In which the haughty Joshi sees a hottie staring back, exploring

virtue and vanity

Customer Disservice

In which the unstoppable Joshi meets an immovable object, among other legendary events, exploring

motivation and Murphy's law

Severed Finger

In which the disillusioned Joshi silences the noise, exploring

industry and identity

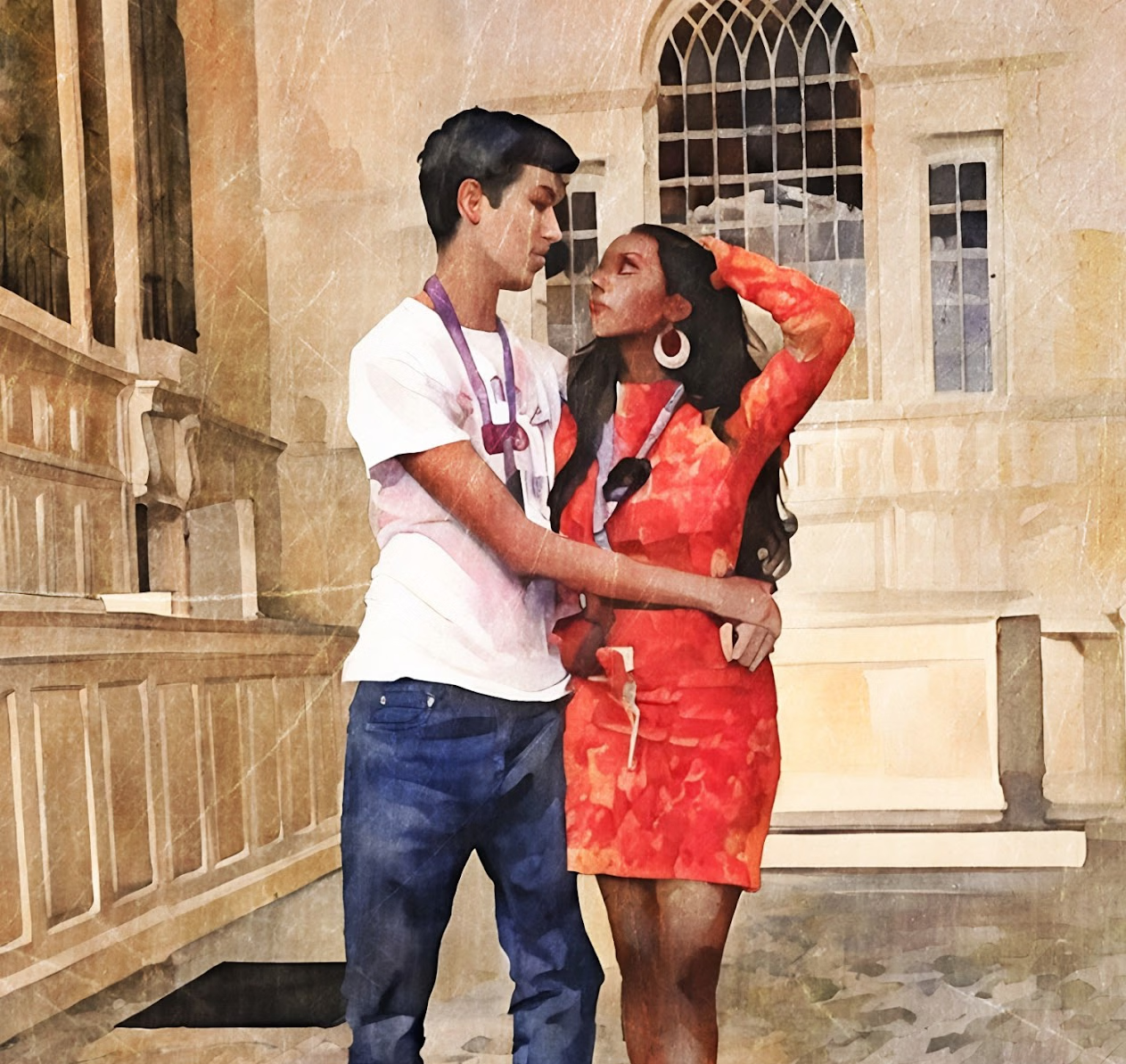

Wandering Elsewhere

About what happened to the young Joshi's first romance after myriad missteps, exploring the timeless themes of

meandering and maturing

Lucid Dreams

About the inconvenient realizations had by the tipsy Joshi after the sixth beer, exploring

begrudging and belonging

Ephemera

About the destroyed coffee cups that made the clingy Joshi let go of the past, exploring

moments and memory

Ballad of the Balance Wheel

In which the nihilist Joshi dissects his beloved wristwatch, exploring

morality and mortality

The Stood-Up Fool

In which a further account is given of the resigned Joshi's first romance after overhearing some gravely unwelcome news, exploring

rejection and resilience

United In Censorship

In which the unmasked Joshi decodes a garbled soliloquy, exploring

avoidance and acceptance

The Great Rat Race

About the contrived and prolonged game of numbers imposed upon the carefree Joshi by none other than the ambitious Joshi, exploring

perfection and purpose

Runaway Train

In which the steadfast Joshi dishonors his post to play ball with friends, exploring

satiety and sacrifice

Captain Obvious

In which final resolution is given of the sappy Joshi's first romance upon revisiting some awfully briny messages, exploring

stagnation and self-actualization

Chinar’s Molarious Mole Jokes

Plus, other equally terrible puns

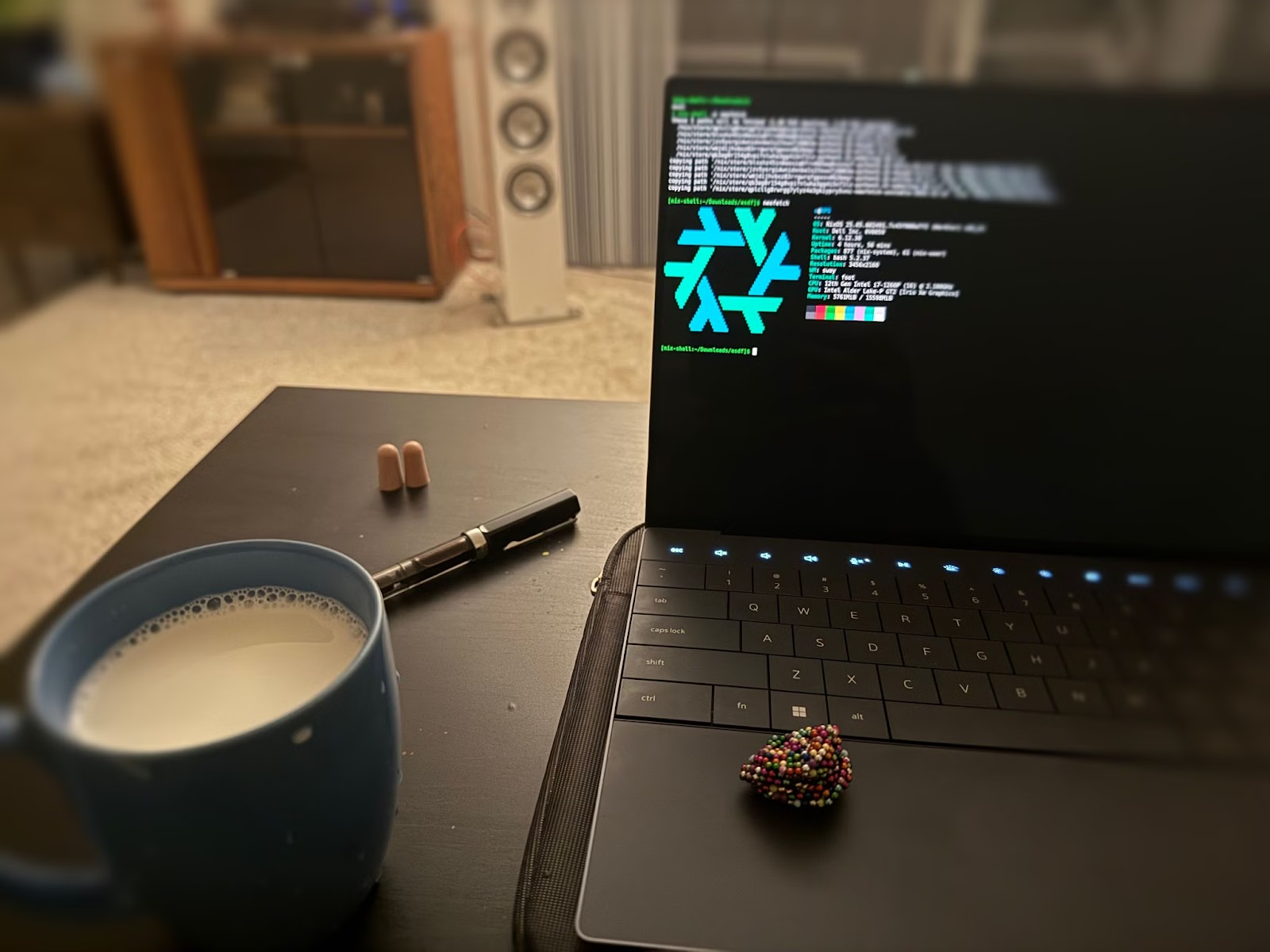

How to connect to WiFi

Plus, unwinding Linux's network stack

Shell Shock

Plus, a survey of undesigned software

English + sh

Plus, the history of natural language interfaces

̅C̅o̅d̅i̅n̅g

Plus, the Monkey Comparison problem

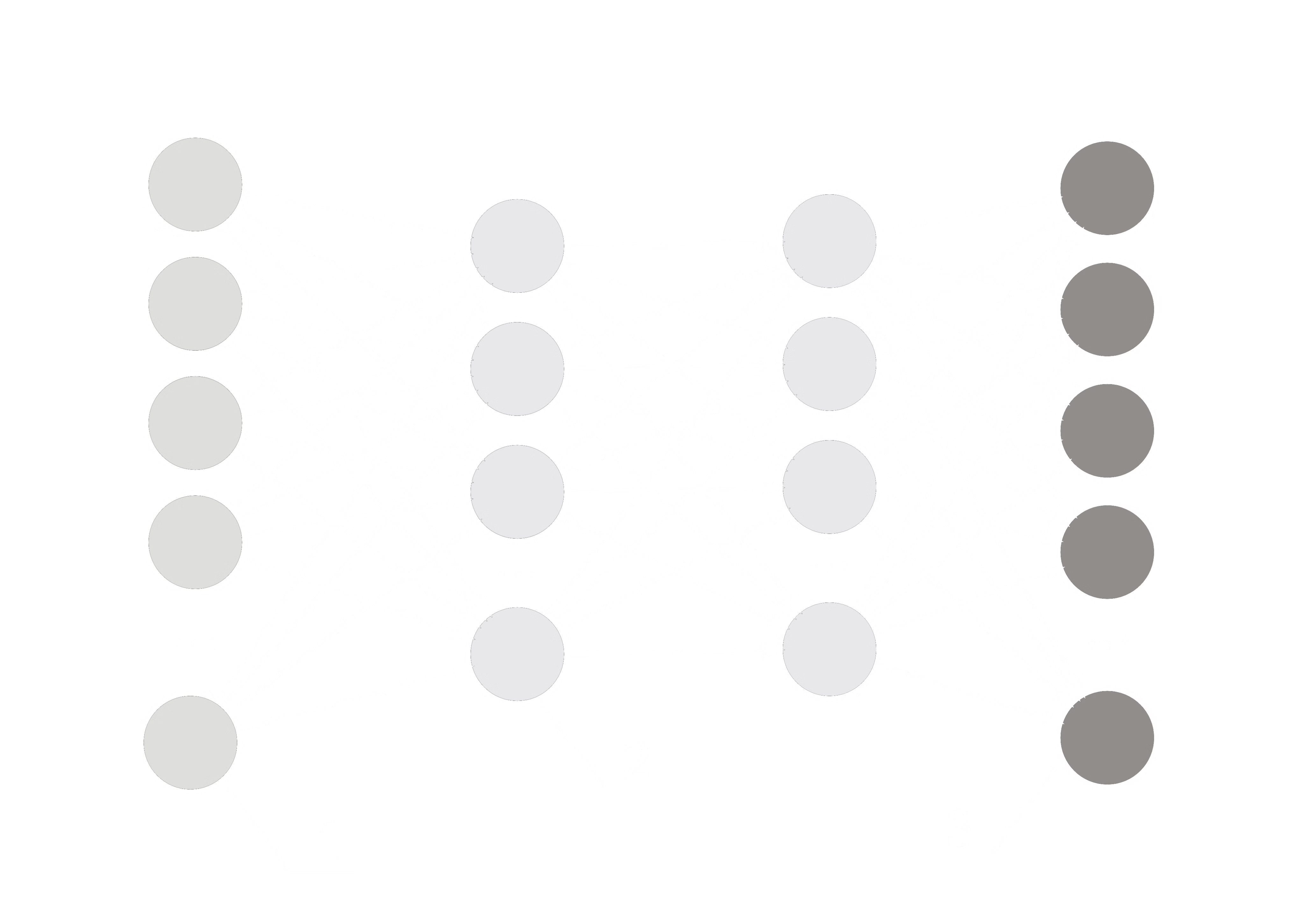

The N in NLP

Plus, a way to unit test LLM prompts through embeddings

VERSION

Plus, sexy libc functions

Algorithmic Monism

Plus, A labor-market approach to inference scheduling in soft-real-time

The Tradeoffs of Compression

Plus, why we'll never declaw intelligence due to Moore’s law